
GapFlyt helps aerial robots navigate more like birds and insects

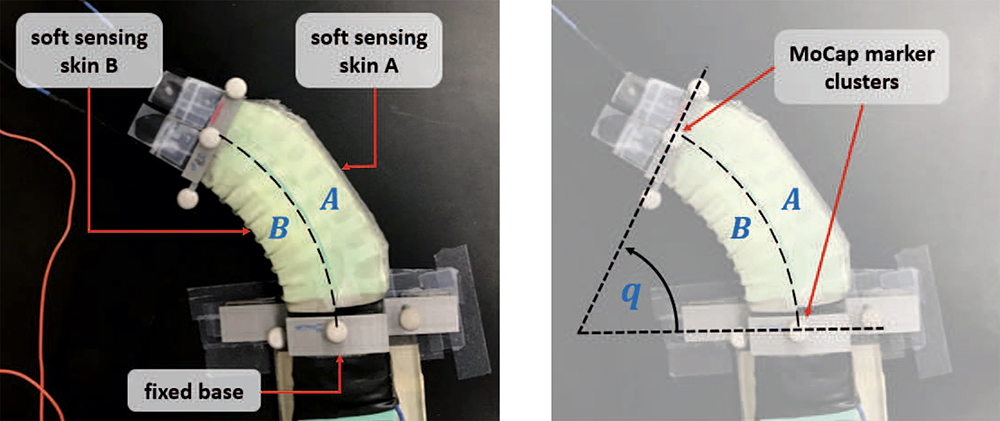

Figure 2 from the paper: components of the environment; inset shows the view of the scene from the quadrotor's camera.

A small flock of birds flies through the gaps in a split-rail fence. Carpenter bees hover briefly around the small holes they have excavated in the side of a wooden house before slipping inside. Birds and insects are especially good at detecting gaps in solid objects and navigating their way through them.

New research by the Perception and Robotics Group at the University of Maryland, published in IEEE Robotics and Automation Letters, could help give quadrotor drones some of the same capabilities found in nature.

“GapFlyt: Active Vision Based Minimalist Structure-Less Gap Detection For Quadrotor Flight,” was written by Computer Science Ph.D. students Nitin Sanket, Chahat Deep Singh, and Kanishka Ganguly, along with UMIACS Associate Research Scientist Cornelia Fermüller and ISR-affiliated Professor Yiannis Aloimonos (CS/UMIACS).

Quadrotors and other aerial robots currently rely on passive perception during flight: traditional computer vision algorithms that aim to build a general-purpose 3D reconstruction of the scene. Planning is then carried out on that reconstruction so the quadrotor can behave autonomously. However, these methods are inefficient and are not task-driven.

Flying insects and birds, on the other hand, are highly task-driven and have long solved problems of navigation and complex control without building 3D maps.

The researchers designed a bio-inspired, minimalist sensorimotor framework that lets a quadrotor fly through unknown gaps. The framework requires only a monocular camera and onboard sensing; it does not reconstruct a 3D model of the scene. The researchers successfully tested their approach in real-world experiments with different settings and window shapes, achieving a success rate of 85% at 2.5 m/s even with a minimum tolerance of just 5 cm.

The paper is believed to be the first to address the problem of gap detection of an unknown shape and location with a monocular camera and onboard sensing. It was published online on June 4, 2018 and will appear in print in the October 2018 issue of the journal.

PDF of the research | Learn more at the Perception and Robotics Group website

Published July 30, 2018