News Story

Real-time remote reconstruction of signals for the Internet of Things

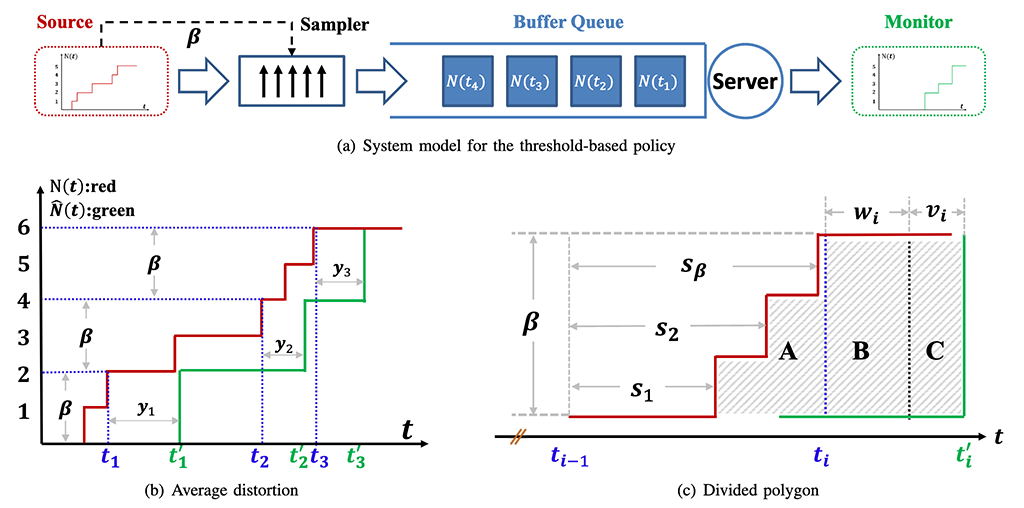

The system model, the overall distortion, and the divided polygons under the threshold-based policy. (Fig. 5 from the paper)

One of the most critical problems for the emerging Internet of Things (IoT) is the real-time remote reconstruction of ongoing signals (or functions of them) from measurements that are under-sampled and aged (delayed) as they pass through the network. Under-sampling discards detail about the original process, and aged measurements work against the need for real-time information. Together, under-sampling and queueing delay create distortion between the observed signal and the reconstructed signal. Reducing this distortion is necessary to improve reconstruction performance, and doing so poses challenges for traditional sampling and signal-processing techniques.

In new work published in IEEE Transactions on Information Theory, Distinguished University Professor Anthony Ephremides (ECE/ISR) and Meng Wang and Wei Chen of Tsinghua University in Beijing study this problem and how to minimize the resulting distortion.

In "Real-Time Reconstruction of a Counting Process through First-Come-First-Serve Queue Systems," the authors address real-time remote reconstruction under three specific sampling policies.

They define the average distortion as the average gap between the original signal and the reconstructed signal, and use it to measure reconstruction performance.
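To make the metric concrete, here is a minimal Python sketch built on assumptions the article does not spell out: the signal is a counting process N(t) (as the paper's title suggests), the receiver holds the count carried by the freshest delivered sample, and the average distortion is the area between N(t) and that reconstruction divided by the observation horizon. The function and parameter names are hypothetical.

```python
import bisect

def average_distortion(arrivals, updates, horizon):
    """Area between N(t) and a hold-last-sample reconstruction, divided by horizon.

    arrivals: sorted jump times of the counting process N(t).
    updates:  (sample_time, delivery_time) pairs in FCFS (delivery) order.
    Until the first delivery, the receiver assumes the count is 0.
    """
    # Both curves are piecewise constant; they change only at arrivals or deliveries.
    breaks = sorted({0.0, horizon}
                    | {a for a in arrivals if a < horizon}
                    | {d for _, d in updates if d < horizon})
    area, j, n_hat = 0.0, 0, 0
    for left, right in zip(breaks, breaks[1:]):
        # Apply every delivery that has happened by `left` (FCFS keeps them in order).
        while j < len(updates) and updates[j][1] <= left:
            n_hat = bisect.bisect_right(arrivals, updates[j][0])  # N(sample_time)
            j += 1
        n_true = bisect.bisect_right(arrivals, left)               # N(left)
        area += abs(n_true - n_hat) * (right - left)
    return area / horizon

# Three events; one sample taken at t = 1.5 and delivered at t = 2.5.
print(average_distortion([1.0, 2.0, 3.0], [(1.5, 2.5)], horizon=4.0))  # 1.125
```

Each term of the sum is the area of one rectangle between the two step curves, which is a simplified analogue of splitting the distortion area into pieces.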

The three policies considered are uniform sampling and two non-uniform sampling policies: the threshold-based policy and the zero-wait policy. For each policy, the authors derived a closed-form expression for the average distortion by dividing the overall distortion area into polygons and analyzing their structure. They found that these polygons are built from sub-polygons that separately account for the distortion caused by sampling and the distortion caused by queueing delay. The closed-form expressions make it possible to find the sampling parameters that minimize the distortion.
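The three policies can be compared in a small Monte Carlo sketch. It is not the authors' closed-form analysis, and every modeling choice is an assumption: Poisson arrivals at rate `lam`, exponential service times at rate `mu` on a single FCFS server, a threshold-based policy read as "sample at every K-th arrival", and a zero-wait policy that takes a new sample the instant the previous one is delivered. The distortion uses the identity area = ∫N(t)dt − ∫N̂(t)dt, which holds because a delayed count can never exceed the current count.

```python
import bisect
import random

def fcfs_deliveries(sample_times, service_times):
    """Delivery instants of samples served one at a time, first come first served."""
    out, free_at = [], 0.0
    for s, x in zip(sample_times, service_times):
        free_at = max(free_at, s) + x  # wait until the server is free, then serve
        out.append(free_at)
    return out

def average_distortion(arrivals, samples, deliveries, horizon):
    """(1/H) * integral over [0, H] of N(t) - N_hat(t); N >= N_hat always holds."""
    area = sum(horizon - a for a in arrivals if a < horizon)  # integral of N(t)
    prev = 0
    for s, d in zip(samples, deliveries):
        if d >= horizon:
            break  # FCFS: every later delivery also falls outside the window
        n_s = bisect.bisect_right(arrivals, s)  # count carried by this sample
        area -= (n_s - prev) * (horizon - d)    # N_hat jumps by n_s - prev at d
        prev = n_s
    return area / horizon

def simulate(policy, param=None, lam=1.0, mu=2.0, horizon=10_000.0, seed=0):
    """Hypothetical model: Poisson(lam) counts, exponential(mu) FCFS service."""
    rng = random.Random(seed)
    arrivals, t = [], rng.expovariate(lam)
    while t < horizon:
        arrivals.append(t)
        t += rng.expovariate(lam)
    if policy == "uniform":        # one sample every `param` time units
        samples = [k * param for k in range(1, int(horizon / param) + 1)]
    elif policy == "threshold":    # assumed: one sample at every `param`-th arrival
        samples = arrivals[param - 1::param]
    elif policy == "zero_wait":    # next sample taken the instant the last is delivered
        samples, deliveries, t = [], [], 0.0
        while t < horizon:
            samples.append(t)
            t += rng.expovariate(mu)
            deliveries.append(t)
        return average_distortion(arrivals, samples, deliveries, horizon)
    else:
        raise ValueError(f"unknown policy: {policy}")
    services = [rng.expovariate(mu) for _ in samples]
    return average_distortion(arrivals, samples, fcfs_deliveries(samples, services), horizon)

for policy, param in [("uniform", 1.0), ("threshold", 2), ("zero_wait", None)]:
    print(f"{policy:>9}: {simulate(policy, param):.3f}")
```

The zero-wait branch never queues a sample, so each delay is a single service time; the other two policies share the FCFS helper.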

These results expose the tradeoff between sampling distortion and queueing distortion: sampling more often captures more detail of the signal, but it also loads the queue and lengthens delays. The optimal parameters balance the two effects and yield the minimum average distortion.
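The tradeoff can be seen numerically. The sketch below assumes Poisson counts at rate `lam`, exponential service at rate `mu`, and uniform sampling with a hypothetical `period` parameter; it simulates the average distortion over a grid of periods and keeps the smallest. It is a brute-force stand-in for the paper's closed-form optimization, not a reproduction of it.

```python
import bisect
import random

def uniform_distortion(period, lam=1.0, mu=2.0, horizon=20_000.0, seed=1):
    """Simulated average distortion of uniform sampling through one FCFS server.

    Hypothetical setup: Poisson(lam) counts, exponential(mu) service times,
    receiver holds the freshest delivered count. A fixed seed gives every
    period the same arrivals and service draws (common random numbers).
    """
    rng = random.Random(seed)
    arrivals, t = [], rng.expovariate(lam)
    while t < horizon:
        arrivals.append(t)
        t += rng.expovariate(lam)
    area = sum(horizon - a for a in arrivals)  # integral of N(t) over [0, H]
    free_at, prev, k = 0.0, 0, 1
    while k * period < horizon:
        s = k * period
        free_at = max(free_at, s) + rng.expovariate(mu)  # FCFS delivery instant
        if free_at >= horizon:
            break
        n_s = bisect.bisect_right(arrivals, s)
        area -= (n_s - prev) * (horizon - free_at)  # remove integral of N_hat(t)
        prev = n_s
        k += 1
    return area / horizon

# Periods below 1/mu = 0.5 would overload the queue, so the grid starts just above it.
periods = [round(0.55 + 0.05 * i, 2) for i in range(40)]  # 0.55 ... 2.50
best = min(periods, key=uniform_distortion)
print(f"best period ~ {best}, distortion ~ {uniform_distortion(best):.3f}")
```

Short periods drive the queue toward saturation and long periods leave large gaps between samples, so the minimum lands strictly inside the grid.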

The authors also introduced interpolation algorithms that can further decrease the average distortion, and derived an upper bound on the performance of these algorithms for the uniform sampling policy. Simulation results validate their derivations and, at the same time, offer intuition for the defined average distortion.
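The article does not describe the authors' interpolation algorithms, so this sketch swaps in one simple stand-in: a hypothetical correction that adds, to the held count, the median of a Poisson increment over the sample's age. Under the assumed model (Poisson counts, uniform sampling, exponential FCFS service), the delivery times are independent of the arrivals, so the increment since the sample is Poisson given its age. Because the median minimizes expected absolute error, the correction cannot raise the expected gap at any instant. Names and parameters are illustrative only.

```python
import bisect
import math
import random

def poisson_median(mean):
    """Smallest k with P(X <= k) >= 1/2 for X ~ Poisson(mean).

    exp(-mean) underflows for mean above ~700; ages here stay far below that.
    """
    k, term = 0, math.exp(-mean)
    cdf = term
    while cdf < 0.5:
        k += 1
        term *= mean / k
        cdf += term
    return k

def compare(period=1.0, lam=1.0, mu=2.0, horizon=2_000.0, dt=0.01, seed=2):
    """Return (hold distortion, corrected distortion), integrated on a grid of step dt."""
    rng = random.Random(seed)
    arrivals, t = [], rng.expovariate(lam)
    while t < horizon:
        arrivals.append(t)
        t += rng.expovariate(lam)
    samples, deliveries, free_at = [], [], 0.0
    for k in range(1, int(horizon / period) + 1):                 # uniform sampling
        free_at = max(free_at, k * period) + rng.expovariate(mu)  # FCFS server
        samples.append(k * period)
        deliveries.append(free_at)
    hold = corrected = 0.0
    for i in range(int(horizon / dt)):
        t = i * dt
        n_true = bisect.bisect_right(arrivals, t)
        j = bisect.bisect_right(deliveries, t) - 1   # freshest delivered sample
        s = samples[j] if j >= 0 else 0.0            # before any delivery: count 0 at t = 0
        n_s = bisect.bisect_right(arrivals, s)
        hold += abs(n_true - n_s) * dt
        # Hypothetical correction: add the median Poisson increment over the age t - s.
        corrected += abs(n_true - n_s - poisson_median(lam * (t - s))) * dt
    return hold / horizon, corrected / horizon

hold, corrected = compare()
print(f"hold: {hold:.3f}  corrected: {corrected:.3f}")
```

The grid integration is approximate but simple; an exact version would also need breakpoints wherever the median changes.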

Published March 11, 2020