2020

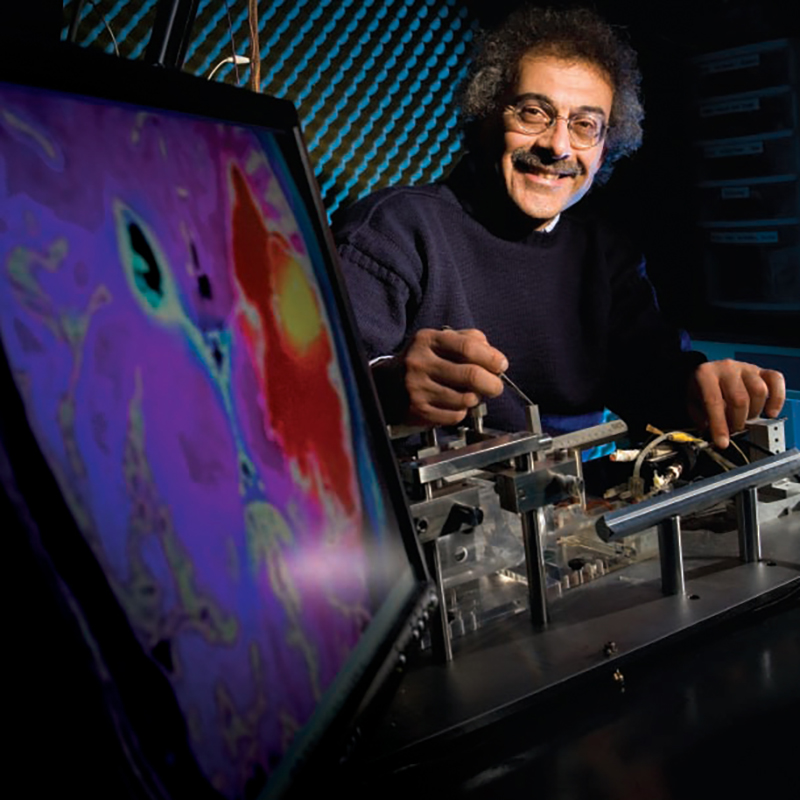

Matthew Evanusa, Cornelia Fermüller, Yiannis Aloimonos

The researchers show that a large, deep, layered spiking neural network with dynamical, chaotic activity that mimics the mammalian cortex and uses biologically inspired learning rules, such as spike-timing-dependent plasticity (STDP), is capable of encoding information from temporal data.

arXiv.org

Matthew Evanusa, Snehesh Shrestha, Michelle Girvan, Cornelia Fermüller, Yiannis Aloimonos

Demonstrates the use of a backpropagation hybrid mechanism for parallel reservoir computing with a meta ring structure and its application to a real-world gesture recognition dataset. This mechanism can be used as an alternative to state-of-the-art recurrent neural networks, LSTMs and GRUs.

arXiv.org

2023

Kate O’Neill, Erin Anderson, Shoutik Mukherjee, Srinivasa Gandu, Sara McEwan, Anton Omelchenko, Ana Rodriguez, Wolfgang Losert, David Meaney, Behtash Babadi, Bonnie Firestein

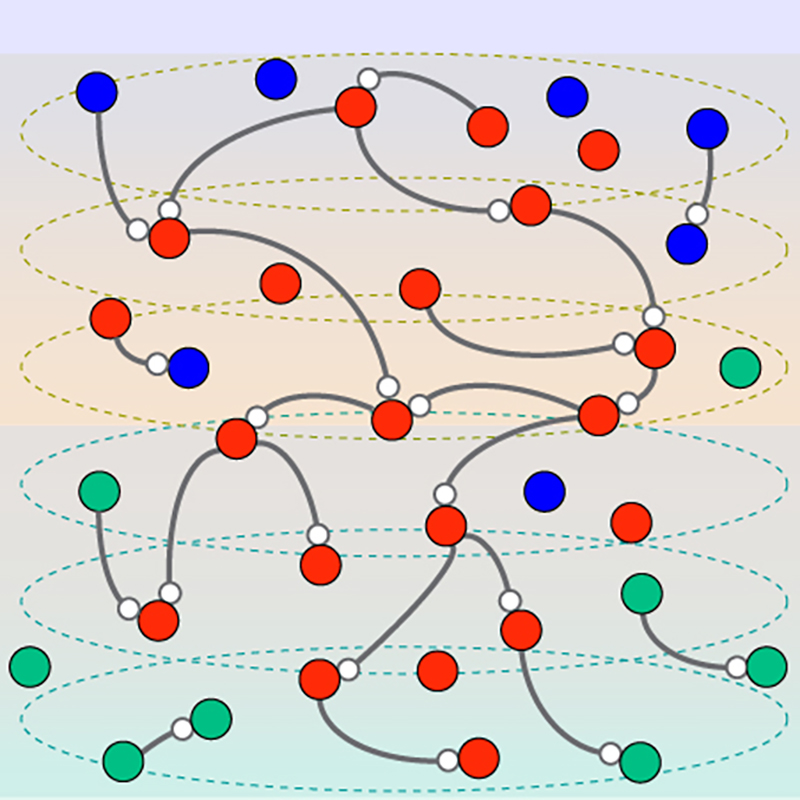

Plasticity and homeostatic mechanisms allow neural networks to maintain proper function while responding to physiological challenges. Despite previous work investigating morphological and synaptic effects of brain-derived neurotrophic factor (BDNF), the most prevalent growth factor in the central nervous system, how exposure to BDNF manifests at the network level remains unknown. Here the researchers report that BDNF treatment affects rodent hippocampal network dynamics during development and recovery from glutamate-induced excitotoxicity in culture. Importantly, these effects are not obvious when traditional activity metrics are used, so the authors delve more deeply into network organization, functional analyses, and in silico simulations. The authors demonstrate that BDNF partially restores homeostasis by promoting recovery of weak and medium connections after injury. Imaging and computational analyses suggest these effects are caused by changes to inhibitory neurons and connections. From their in silico simulations, the authors find that BDNF remodels the network by indirectly strengthening weak excitatory synapses after injury. Ultimately, these findings may explain the difficulties encountered in preclinical and clinical trials with BDNF and also offer information for future trials to consider.

Communications Biology, a Nature publication

Ruwanthi Abeysekara, Christopher J. Smalt, I. M. Dushyanthi Karunathilake, Jonathan Z. Simon, Behtash Babadi

Speaker-specific attention decoding from neural recordings to suppress the acoustic background and extract a target speaker in an in-the-wild multi-speaker conversation scenario poses a cornerstone challenge for advanced hearing devices. Despite several recent advances in auditory attention decoding, most existing approaches fail to reach the real-time performance and attention decoding accuracy required by hearing aid devices. In this work, the authors aim to quantify fundamental limits on the performance of auditory attention decoding by establishing and computing the trade-off between accuracy and decision window length.

UMD Simon Lab

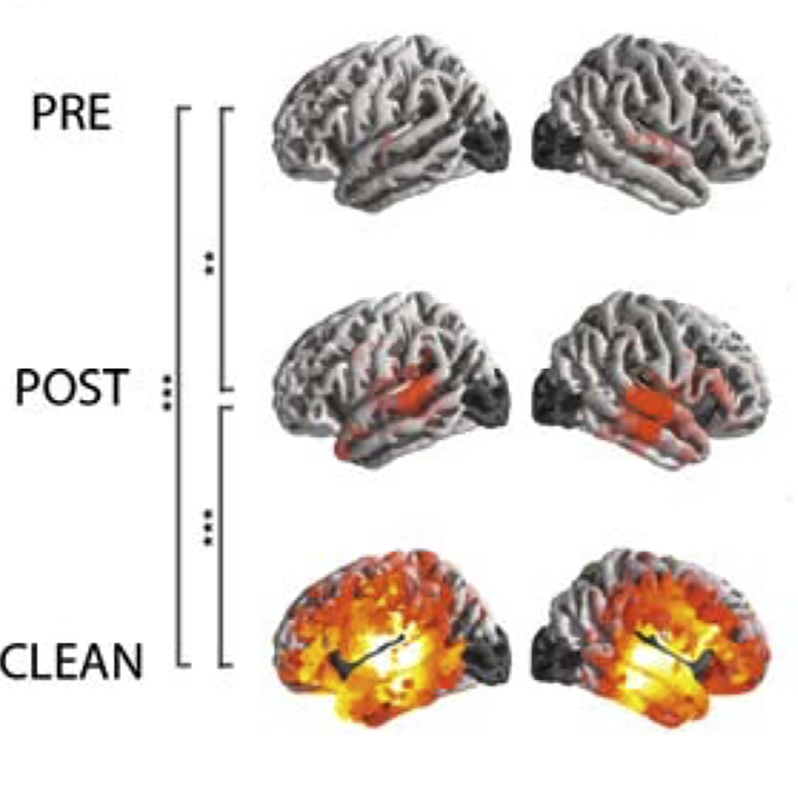

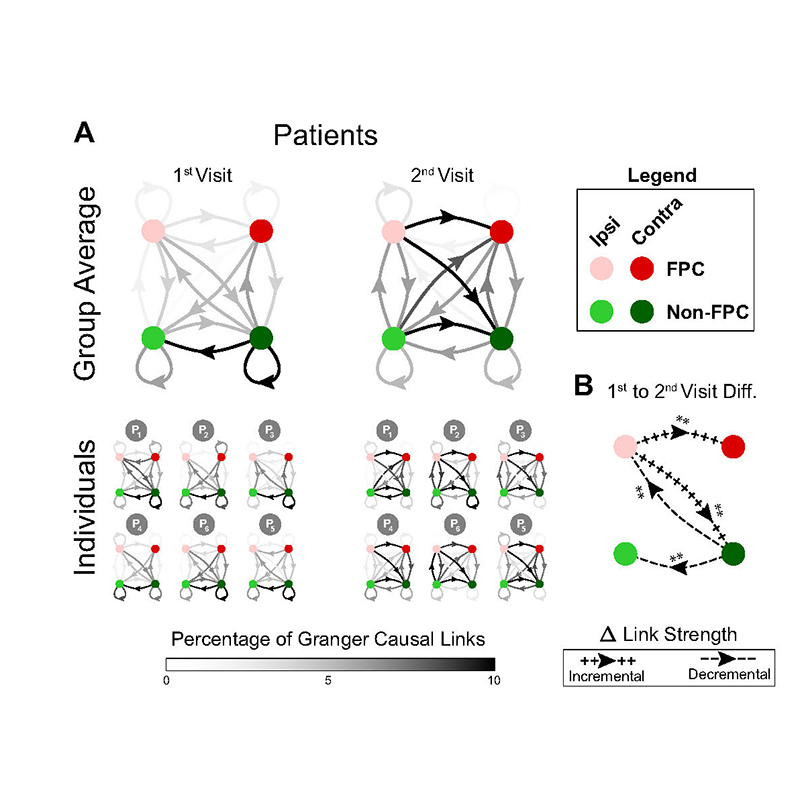

Behrad Soleimani, Isabella Dallasta, Proloy Das, Joshua Kulasingham, Sophia Girgenti, Jonathan Simon, Behtash Babadi, Elisabeth Marsh

This study provides supporting evidence that the neural basis of early post-stroke cognitive dysfunction occurs at the network level, and continued recovery correlates with the evolution of inter-hemispheric connectivity.

Brain Communications

Proloy Das, Behtash Babadi

Granger causality is among the widely used data-driven approaches for causal analysis of time series data with applications in various areas including economics, molecular biology, and neuroscience. Two of the main challenges of this methodology are: 1) over-fitting as a result of limited data duration, and 2) correlated process noise as a confounding factor, both leading to errors in identifying the causal influences. Sparse estimation via the LASSO has successfully addressed these challenges for parameter estimation. However, the classical statistical tests for Granger causality resort to asymptotic analysis of ordinary least squares, which require long data duration to be useful and are not immune to confounding effects. In this work, the authors address this disconnect by introducing a LASSO-based statistic and studying its non-asymptotic properties under the assumption that the true models admit sparse autoregressive representations.

IEEE Transactions on Information Theory
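
As a point of reference for the classical approach that the abstract above contrasts with, the following is a minimal sketch of a one-lag, ordinary-least-squares Granger causality measure. It is not the LASSO-based statistic introduced in the paper. The function name `granger_lag1`, the single-lag model without an intercept, and the synthetic data are all assumptions made for illustration.

```python
import math
import random


def granger_lag1(x, y):
    """Classical one-lag Granger measure for 'x Granger-causes y'.

    Compares a restricted model y[t] = a*y[t-1] with a full model
    y[t] = a*y[t-1] + b*x[t-1], both fit by ordinary least squares
    without an intercept, and returns log(RSS_restricted / RSS_full).
    """
    y_next, y_prev, x_prev = y[1:], y[:-1], x[:-1]

    # Sufficient statistics for the normal equations.
    syy = sum(v * v for v in y_prev)
    sxx = sum(v * v for v in x_prev)
    sxy = sum(a * b for a, b in zip(x_prev, y_prev))
    syt = sum(a * b for a, b in zip(y_prev, y_next))
    sxt = sum(a * b for a, b in zip(x_prev, y_next))

    # Restricted model: y's own past only.
    a_r = syt / syy
    rss_r = sum((t - a_r * p) ** 2 for t, p in zip(y_next, y_prev))

    # Full model: solve the 2x2 normal equations with Cramer's rule.
    det = syy * sxx - sxy * sxy
    a_f = (syt * sxx - sxt * sxy) / det
    b_f = (sxt * syy - syt * sxy) / det
    rss_f = sum(
        (t - a_f * p - b_f * q) ** 2
        for t, p, q in zip(y_next, y_prev, x_prev)
    )
    return math.log(rss_r / rss_f)


# Synthetic example (hypothetical data): y is driven by the past of x.
rng = random.Random(0)
x = [rng.gauss(0.0, 1.0) for _ in range(500)]
y = [0.0]
for t in range(1, 500):
    y.append(0.8 * x[t - 1] + 0.1 * rng.gauss(0.0, 1.0))

gc_xy = granger_lag1(x, y)  # influence of x on y
gc_yx = granger_lag1(y, x)  # influence of y on x
```

In this synthetic setting `gc_xy` should come out much larger than `gc_yx`. With short recordings or many candidate sources, such OLS fits tend to over-fit, which is the regime the sparse approach described above targets.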

2022

Behrad Soleimani, Proloy Das, I.M. Dushyanthi Karunathilake, Stefanie E. Kuchinsky, Jonathan Z. Simon, Behtash Babadi

Introduces the Network Localized Granger Causality (NLGC) inference paradigm, which models the source dynamics as latent sparse multivariate autoregressive processes and estimates their parameters directly from the MEG measurements, integrated with source localization, and employs the resulting parameter estimates to produce a precise statistical characterization of the detected GC links.

bioRxiv.org

2021

Anuththara Rupasinghe, Nikolas Francis, Ji Liu, Zac Bowen, Patrick Kanold, Behtash Babadi

Proposes a methodology to directly estimate both signal and noise correlations from two-photon imaging observations, without requiring an intermediate step of spike deconvolution.

eLife

2020

Sina Miran, Behtash Babadi, Alessandro Presacco, Jonathan Simon, Michael Fu, Steven Marcus

This research develops efficient algorithms for inferring the parameters of a general class of Gaussian mixture process noise models from noisy and limited observations, and utilizes them to extract the neural dynamics that underlie auditory processing from magnetoencephalography (MEG) data in a cocktail party setting.

PLOS Computational Biology

Anuththara Rupasinghe, Behtash Babadi

Extracting the spectral representations of neural processes that underlie spiking activity is key to understanding how brain rhythms mediate cognitive functions. This work develops a multitaper spectral estimation methodology that can be directly applied to multivariate spiking observations to extract the semi-stationary spectral density of the latent non-stationary processes that govern spiking activity.

IEEE Transactions on Signal Processing

Anuththara Rupasinghe, Behtash Babadi

Proposes an algorithm to directly estimate neuronal correlations from ensemble two-photon imaging data, by integrating techniques from point process modeling and variational Bayesian inference, with no recourse to intermediate spike deconvolution.

2020 54th Annual Conference on Information Sciences and Systems

Proloy Das, Christian Brodbeck, Jonathan Z. Simon, Behtash Babadi

Presents a principled modeling and estimation paradigm for MEG source analysis, tailored to extracting the cortical origin of electrophysiological responses to continuous stimuli.

NeuroImage

Behrad Soleimani, Proloy Das, Joshua Kulasingham, Jonathan Z. Simon, Behtash Babadi

The authors consider the problem of determining Granger causal influences among sources that are indirectly observed through low-dimensional and noisy linear projections. They model the source dynamics as sparse vector autoregressive processes and estimate the model parameters directly from the observations, with no recourse to intermediate source localization.

54th Conference on Information Sciences and Systems

2022

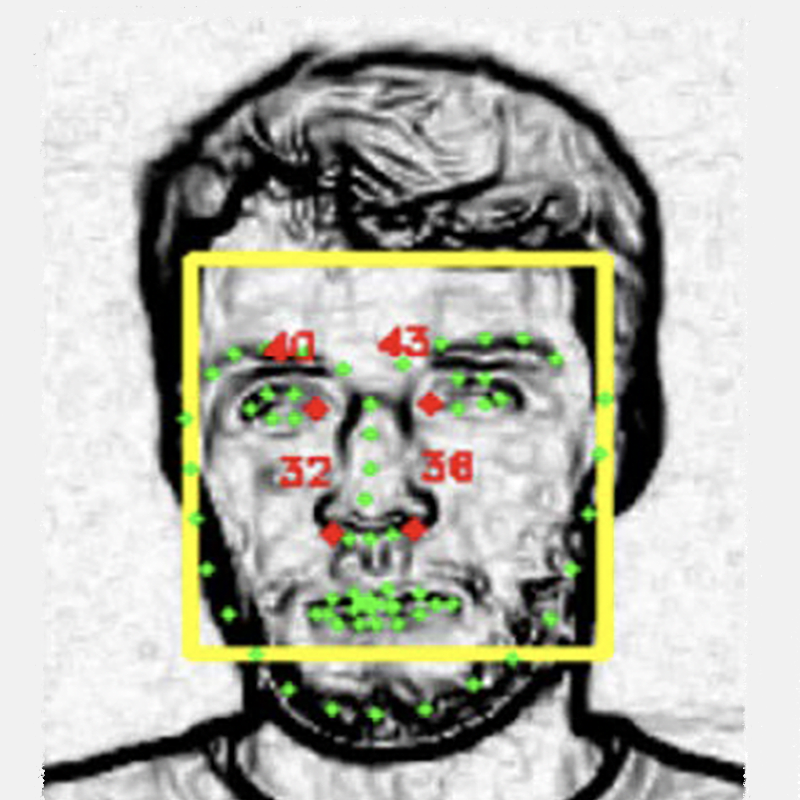

Felix Bartsch, Bevil R. Conway, Daniel Butts

Color vision requires comparing the activity of cone types across visual space. V1 is likely important in carrying out these computations, but establishing the underlying neural mechanisms has been complicated by several issues: (1) cone density is highest at the fovea, where even tiny fixational eye movements can be disruptive for mapping spatial receptive fields; (2) V1 processing is nonlinear; and (3) the integration of spatial and chromatic information likely depends on circuit-level interactions that occur across layers within V1. A further challenge is the lack of a theoretical framework for interpreting neurophysiological measurements. The authors designed two-dimensional spatiochromatic noise stimuli that contain the wide range of spatiochromatic combinations necessary to fit data-driven models to V1 neurons recorded while the stimuli are presented.

Journal of Vision

2021

Ethan Cheng, Daniel Butts

How information from both eyes aligns in the primary visual cortex (V1) depends on the vergence of the eyes and the depth of a given object, resulting in a particular binocular disparity. However, a significant fraction of V1 neurons are binocular but not modulated by binocular disparity, raising the question of how their outputs can be meaningful.

Journal of Vision

Katrina Quinn, Lenka Seillier, Daniel Butts, Hendrikje Nienborg

Feedback in the brain is thought to convey contextual information that underlies our flexibility to perform different tasks. The authors show that although the behavior is spatially selective, using only task-relevant information, modulation by decision-related feedback is spatially unselective.

Nature Communications

2020

Matthew Whiteway, Bruno Averbeck, Daniel Butts

The authors propose a new decoding framework that exploits the low-dimensional structure of neural population variability by removing correlated variability unrelated to the decoded variable, then decoding the resulting denoised activity.

bioRxiv.org

2019

Matthew Whiteway, Daniel Butts

Explores latent variable models for describing the coordinated activity of large neuron populations in neural recordings and its role in brain function.

Current Opinion in Neurobiology

2023

Gowtham Premananth, Yashish M. Siriwardena, Philip Resnik, Carol Espy-Wilson

This study focuses on how different modalities of human communication can be used to distinguish between healthy controls and subjects with schizophrenia who exhibit strong positive symptoms. The authors developed a multi-modal schizophrenia classification system using audio, video, and text.

arXiv.org

Kaitlyn Arrow, Philip Resnik, Hanna Michel, Christopher Kitchen, Chen Mo, Shuo Chen, Carol Espy-Wilson, Glen Coppersmith, Colin Frazier & Deanna L. Kelly

Although digital health solutions are increasingly popular in clinical psychiatry, one application that has not been fully explored is the utilization of survey technology to monitor patients outside of the clinic. Supplementing routine care with digital information collected in the “clinical whitespace” between visits could improve care for patients with severe mental illness. This study evaluated the feasibility and validity of using online self-report questionnaires to supplement in-person clinical evaluations in persons with and without psychiatric diagnoses.

Psychiatric Quarterly

2020

Matthew Evanusa, Cornelia Fermüller, Yiannis Aloimonos

The researchers show that a large, deep, layered spiking neural network with dynamical, chaotic activity that mimics the mammalian cortex and uses biologically inspired learning rules, such as spike-timing-dependent plasticity (STDP), is capable of encoding information from temporal data.

arXiv.org

Matthew Evanusa, Snehesh Shrestha, Michelle Girvan, Cornelia Fermüller, Yiannis Aloimonos

Demonstrates the use of a backpropagation hybrid mechanism for parallel reservoir computing with a meta ring structure and its application to a real-world gesture recognition dataset. This mechanism can be used as an alternative to state-of-the-art recurrent neural networks, LSTMs and GRUs.

arXiv.org

2023

Arya Teymourlouei, Joshua Stone, Rodolphe Gentili, James Reggia

The automated classification of a participant’s mental workload based on electroencephalographic (EEG) data is a challenging problem. Recently, network-based approaches have been introduced for this purpose. The authors seek to build on this work by introducing a novel feature extraction method for mental workload classification which uses multiplex networks formed from visibility graphs (VGs). The VG algorithm is an effective method for transforming a time series into a complex network representation.

International Conference on Brain Informatics (2023)
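
To make the visibility graph idea concrete, here is a minimal sketch of the natural visibility criterion, in which two samples are linked when the straight line between them passes above every sample in between. The function name `visibility_graph` and the choice of the natural (rather than horizontal) variant are assumptions for illustration; the multiplex construction used by the authors is not reproduced here.

```python
def visibility_graph(series):
    """Return the edge set of the natural visibility graph of a time series.

    Nodes are sample indices. Indices i < j are connected when every
    intermediate sample k lies strictly below the line joining
    (i, series[i]) and (j, series[j]).
    """
    n = len(series)
    edges = set()
    for i in range(n - 1):
        for j in range(i + 1, n):
            visible = all(
                series[k]
                < series[i] + (series[j] - series[i]) * (k - i) / (j - i)
                for k in range(i + 1, j)
            )
            if visible:
                edges.add((i, j))
    return edges


# Small illustrative series (hypothetical values).
example_edges = visibility_graph([1, 3, 2, 4])
```

Adjacent samples are always connected because there is nothing between them to block the view. This brute-force version checks every pair and is meant only to show the criterion, not to scale to long EEG recordings.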

Caitlin Mahon, Brad Hendershot, Christopher Gaskins, Bradley Hatfield, Emma Shaw, Rodolphe Gentili

This study examines the concurrent modulation of gait and electroencephalography (EEG) cortical dynamics underlying mental workload during split-belt locomotor adaptation, with and without optic flow.

Experimental Brain Research

2023

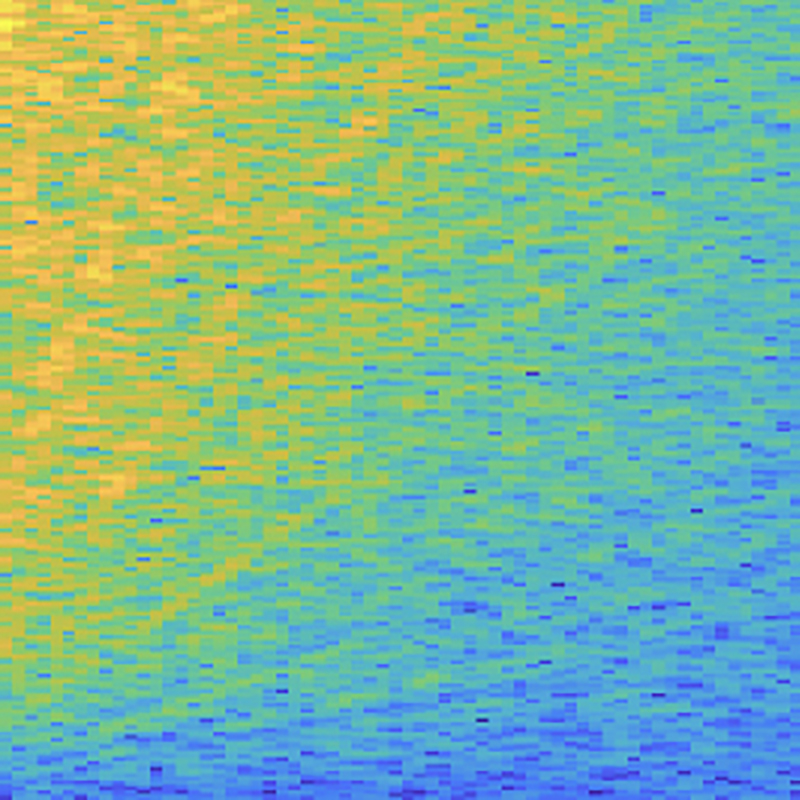

Yuichi Goto, Xuze Zhang, Benjamin Kedem, Shuo Chen

Coherence is a widely used measure to assess linear relationships between time series. However, it fails to capture nonlinear dependencies. To overcome this limitation, this paper introduces the notion of residual spectral density as a higher-order extension of the squared coherence. The method is based on an orthogonal decomposition of time series regression models. The authors propose a test for testing the existence of the residual spectrum and derive its fundamental properties. A numerical study illustrates finite sample performance of the proposed method. An application of the method shows that the residual spectrum can effectively detect brain connectivity.

arXiv.org
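
For reference, the squared coherence that the residual spectrum extends is conventionally defined as below. The notation is assumed here rather than taken from the paper: $S_{xx}(f)$ and $S_{yy}(f)$ denote the spectral densities of two time series $x$ and $y$, and $S_{xy}(f)$ their cross-spectral density.

$$
C_{xy}(f) = \frac{\lvert S_{xy}(f) \rvert^{2}}{S_{xx}(f)\, S_{yy}(f)}, \qquad 0 \le C_{xy}(f) \le 1
$$

A value near 1 at frequency $f$ indicates that one series is close to a linear time-invariant function of the other at that frequency, which is why a purely nonlinear dependence can go undetected by this measure.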

2021

Puneet Mathur, Trisha Mittal, Dinesh Manocha

A new approach, AdaGTCN, can identify human reader intent from electroencephalogram (EEG) and eye movement (EM) data to help differentiate between normal reading and task-oriented reading.

arXiv.org

2022

Regina Calloway, Michael Johns, Ian Phillips, Valerie Karuzis, Kelsey Dutta, Ed Smith, Shihab Shamma, Matthew Goupell, Stefanie Kuchinsky

Understanding speech in noisy environments can be challenging and requires listeners to accurately segregate a target speaker from irrelevant background noise. In this research, an online stochastic figure-ground (SFG) task with complex stimuli consisting of a sequence of inharmonic pure-tone chords was administered to 37 young, normal-hearing adults to obtain a purer measure of auditory stream segregation that does not rely on linguistic stimuli. Detection of target figure chords consisting of 4, 6, 8, or 10 temporally coherent tones amongst a background of randomly varying tones was measured. Increased temporal coherence (i.e., number of tones in a figure chord) resulted in higher accuracy and faster reaction times (RTs). At higher coherence levels, faster RTs were associated with better scores on a standardized speech-in-noise recognition task. Increased working memory capacity hindered SFG accuracy as the task progressed, whereas self-reported musicianship modulated the relationship between speech-in-noise recognition and SFG accuracy. Overall, results demonstrate that the SFG task could serve as an assessment of auditory stream segregation that is sensitive enough to capture individual differences in working memory capacity and musicianship.

arXiv.org

2021

Yashish M. Siriwardena, Guilhem Marion, Shihab Shamma

This paper applies the recently proposed “MirrorNet,” a constrained autoencoder architecture, to learn, in an unsupervised manner, the controls of a specific audio synthesizer (DIVA) to produce melodies only from their auditory spectrograms. The results demonstrate how the MirrorNet discovers the synthesizer parameters needed to generate melodies that closely resemble the originals, including previously unseen melodies, and even determines the best set of parameters to approximate renditions of complex piano melodies generated by a different synthesizer. This generalizability of the MirrorNet illustrates its potential to discover from sensory data the controls of arbitrary motor-plants such as autonomous vehicles.

arXiv.org

Guilhem Marion, Giovanni M. Di Liberto, Shihab Shamma

It is well known that the human brain is activated during musical imagery: the act of voluntarily hearing music in our mind without external stimulation. It is unclear, however, what the temporal dynamics of this activation are, as well as what musical features are precisely encoded in the neural signals. This study uses an experimental paradigm with high temporal precision to record and analyze the cortical activity during musical imagery. This study reveals that neural signals encode music acoustics and melodic expectations during both listening and imagery. Crucially, it is also found that a simple mapping based on a time-shift and a polarity inversion could robustly describe the relationship between listening and imagery signals.

Journal of Neuroscience

Giovanni M. Di Liberto, Guilhem Marion, Shihab Shamma

Music perception depends on our ability to learn and detect melodic structures. It has been suggested that our brain does so by actively predicting upcoming music notes, a process inducing instantaneous neural responses as the music confronts these expectations. Here, the authors studied this prediction process using EEG recorded while participants listened to and imagined Bach melodies. Specifically, they examined neural signals during the ubiquitous musical pauses (or silent intervals) in a music stream and analyzed them in contrast to the imagery responses. The authors find that imagined predictive responses are routinely co-opted during ongoing music listening. These conclusions are revealed by a new paradigm using listening and imagery of naturalistic melodies.

Journal of Neuroscience

2020

Shihab Shamma, Prachi Patel, Shoutik Mukherjee, Guilhem Marion, Bahar Khalighinejad, Cong Han, Jose Herrero, Stephan Bickel, Ashesh Mehta, Nima Mesgarani

Action and perception are closely linked in many behaviors necessitating a close coordination between sensory and motor neural processes so as to achieve a well-integrated smoothly evolving task performance. To investigate the detailed nature of these sensorimotor interactions, and their role in learning and executing the skilled motor task of speaking, the authors analyzed ECoG recordings of responses in the high-γ band (70–150 Hz) in human subjects while they listened to, spoke, or silently articulated speech. They found elaborate spectrotemporally modulated neural activity projecting in both “forward” (motor-to-sensory) and “inverse” directions between the higher-auditory and motor cortical regions engaged during speaking. Furthermore, mathematical simulations demonstrate a key role for the forward projection in “learning” to control the vocal tract, beyond its commonly postulated predictive role during execution. These results therefore offer a broader view of the functional role of the ubiquitous forward projection as an important ingredient in learning, rather than just control, of skilled sensorimotor tasks.

Cerebral Cortex Communications (Oxford Academic)

2023

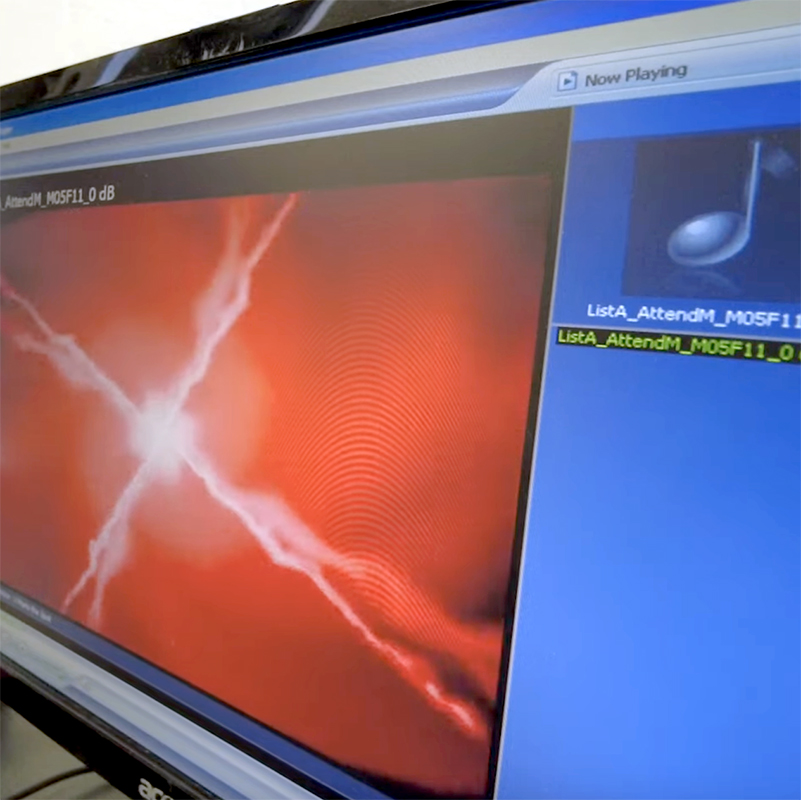

Ruwanthi Abeysekara, Christopher J. Smalt, I. M. Dushyanthi Karunathilake, Jonathan Z. Simon, Behtash Babadi

Speaker-specific attention decoding from neural recordings to suppress the acoustic background and extract a target speaker in an in-the-wild multi-speaker conversation scenario poses a cornerstone challenge for advanced hearing devices. Despite several recent advances in auditory attention decoding, most existing approaches fail to reach the real-time performance and attention decoding accuracy required by hearing aid devices. In this work, the authors aim to quantify fundamental limits on the performance of auditory attention decoding by establishing and computing the trade-off between accuracy and decision window length.

UMD Simon Lab

Behrad Soleimani, Isabella Dallasta, Proloy Das, Joshua Kulasingham, Sophia Girgenti, Jonathan Simon, Behtash Babadi, Elisabeth Marsh

This study provides supporting evidence that the neural basis of early post-stroke cognitive dysfunction occurs at the network level, and continued recovery correlates with the evolution of inter-hemispheric connectivity.

Brain Communications

Xiaoming Du, Stephanie Hare, Ann Summerfelt, Bhim M. Adhikari, Laura Garcia, Wyatt Marshall, Peng Zan, Mark Kvarta, Eric Goldwaser, Heather Bruce, Si Gao, Hemalatha Sampath, Peter Kochunov, Jonathan Z. Simon & L. Elliot Hong

Aberrant gamma frequency neural oscillations in schizophrenia have been well demonstrated using auditory steady-state responses (ASSR). However, the neural circuits underlying 40 Hz ASSR deficits in schizophrenia remain poorly understood. Sixty-six patients with schizophrenia spectrum disorders and 85 age- and gender-matched healthy controls completed one electroencephalography session measuring 40 Hz ASSR and one imaging session for resting-state functional connectivity (rsFC) assessments. The associations between the normalized power of 40 Hz ASSR and rsFC were assessed via linear regression and mediation models. Here, the authors find that rsFC among auditory, precentral, postcentral, and prefrontal cortices were positively associated with 40 Hz ASSR in patients and controls separately and in the combined sample. The mediation analysis further confirmed that the deficit of gamma band ASSR in schizophrenia was nearly fully mediated by three of the rsFC circuits between right superior temporal gyrus—left medial prefrontal cortex (MPFC), left MPFC—left postcentral gyrus (PoG), and left precentral gyrus—right PoG. Gamma-band ASSR deficits in schizophrenia may be associated with deficient circuitry level connectivity to support gamma frequency synchronization. Correcting gamma band deficits in schizophrenia may require corrective interventions to normalize these aberrant networks.

Translational Psychiatry, a Nature publication

2022

Behrad Soleimani, Proloy Das, I.M. Dushyanthi Karunathilake, Stefanie E. Kuchinsky, Jonathan Z. Simon, Behtash Babadi

Introduces the Network Localized Granger Causality (NLGC) inference paradigm, which models the source dynamics as latent sparse multivariate autoregressive processes and estimates their parameters directly from the MEG measurements, integrated with source localization, and employs the resulting parameter estimates to produce a precise statistical characterization of the detected GC links.

bioRxiv.org

Joshua Kulasingham, Jonathan Simon

The Temporal Response Function (TRF) is a linear model of neural activity time-locked to continuous stimuli, including continuous speech. TRFs based on speech envelopes typically have distinct components that have provided remarkable insights into the cortical processing of speech. However, current methods may lead to less than reliable estimates of single-subject TRF components. The authors compare two established methods for TRF component estimation and also propose novel estimation algorithms that utilize prior knowledge of these components, bypassing the full TRF estimation.

bioRxiv.org
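
The linear model behind the TRF can be written compactly as follows. This is the standard convolutional form with notation assumed here rather than taken from the paper: $s(t)$ is the stimulus feature (for example, the speech envelope), $r(t)$ the recorded neural response, $h(\tau)$ the TRF over lags $\tau_{\min}$ to $\tau_{\max}$, and $\varepsilon(t)$ the residual.

$$
r(t) = \sum_{\tau = \tau_{\min}}^{\tau_{\max}} h(\tau)\, s(t - \tau) + \varepsilon(t)
$$

Peaks and troughs of the estimated $h(\tau)$ at particular lags are the "components" referred to above, so errors in estimating $h$ translate directly into unreliable component amplitudes and latencies.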

2021

Christian Brodbeck, Jonathan Simon

Voice pitch carries linguistic as well as non-linguistic information. Previous studies have described cortical tracking of voice pitch in clean speech, with responses reflecting both pitch strength and pitch value. However, pitch is also a powerful cue for auditory stream segregation, especially when competing streams have pitch differing in fundamental frequency, as is the case when multiple speakers talk simultaneously. The authors investigate how cortical speech pitch tracking is affected in the presence of a second, task-irrelevant speaker.

bioRxiv.org

Marlies Gillis, Jonas Vanthornhout, Jonathan Simon, Tom Francart and Christian Brodbeck

The authors evaluate the potential of several recently proposed linguistic representations as neural markers of speech comprehension.

Journal of Neuroscience

Joshua Kulasingham, Neha Joshi, Mohsen Rezaeizadeh, Jonathan Simon

This study shows that the neural responses to arithmetic and language are especially well segregated during the cocktail party paradigm. A correlation with behavior suggests that they may be linked to successful comprehension or calculation.

Journal of Neuroscience

Stephanie Hare, Bhim Adhikari, Xiaoming Du, Laura Garcia, Heather Bruce, Peter Kochunov, Jonathan Simon, Elliot Hong

The study investigates how local and long-range functional connectivity is associated with auditory perceptual disturbances (APD) in schizophrenia.

Schizophrenia Research

Joshua Kulasingham, Neha Joshi, Mohsen Rezaeizadeh, Jonathan Simon

Cortical processing of arithmetic and of language relies on both shared and task-specific neural mechanisms, which should also be dissociable from the particular sensory modality used to probe them. Here, spoken arithmetical and non-mathematical statements were employed to investigate neural processing of arithmetic, compared to general language processing, in an attention-modulated cocktail party paradigm.

bioRxiv.org

2020

Elisabeth Marsh, Christian Brodbeck, Rafael Llinas, Dania Mallick, Joshua Kulasingham, Jonathan Simon, and Rodolfo Llinas

For the first time, measurable physical evidence is provided of diminished neural processing within the brain after a stroke. The evidence suggests that poststroke acute dysexecutive syndrome (PSADES) is the result of a global connectivity dysfunction.

Proceedings of the National Academy of Sciences of the United States of America

Christian Brodbeck, Alex Jiao, L. Elliot Hong, Jonathan Simon

Humans are remarkably skilled at listening to one speaker out of an acoustic mixture of several speech sources. Two speakers are easily segregated, even without binaural cues, but the neural mechanisms underlying this ability are not well understood. One possibility is that early cortical processing performs a spectrotemporal decomposition of the acoustic mixture, allowing the attended speech to be reconstructed via optimally weighted recombinations that discount spectrotemporal regions where sources heavily overlap. Using human magnetoencephalography (MEG) responses to a 2-talker mixture, the authors show evidence for an alternative possibility, in which early, active segregation occurs even for strongly spectrotemporally overlapping regions.

PLOS Biology

Peng Zan, Alessandro Presacco, Samira Anderson, Jonathan Simon

Aging is associated with an exaggerated representation of the speech envelope in auditory cortex. The relationship between this age-related exaggerated response and a listener’s ability to understand speech in noise remains an open question. Here, information-theory-based analysis methods are applied to magnetoencephalography recordings of human listeners, investigating their cortical responses to continuous speech, using the novel nonlinear measure of phase-locked mutual information between the speech stimuli and cortical responses. The cortex of older listeners shows an exaggerated level of mutual information, compared with younger listeners, for both attended and unattended speakers.

Journal of Neurophysiology

Peng Zan, Alessandro Presacco, Samira Anderson, Jonathan Simon

Information-theory-based analysis methods are applied to magnetoencephalography recordings of human listeners, investigating their cortical responses to continuous speech, using the novel nonlinear measure of phase-locked mutual information between the speech stimuli and cortical responses. This information-theory-based analysis provides new, and less coarse-grained, results regarding age-related change in auditory cortical speech processing, and its correlation with cognitive measures, compared with related linear measures.

Journal of Neurophysiology

Christian Brodbeck, Jonathan Simon

Speech processing in the human brain is grounded in non-specific auditory processing in the general mammalian brain, but relies on human-specific adaptations for processing speech and language. For this reason, many recent neurophysiological investigations of speech processing have turned to the human brain, with an emphasis on continuous speech. This article considers the substantial progress that has been made using the phenomenon of 'neural speech tracking,' in which neurophysiological responses time-lock to the rhythm of auditory (and other) features in continuous speech.

Current Opinion in Physiology

Sina Miran, Behtash Babadi, Alessandro Presacco, Jonathan Simon, Michael Fu, Steven Marcus

This research develops efficient algorithms for inferring the parameters of a general class of Gaussian mixture process noise models from noisy and limited observations, and utilizes them to extract the neural dynamics that underlie auditory processing from magnetoencephalography (MEG) data in a cocktail party setting.

PLOS Computational Biology

Proloy Das, Christian Brodbeck, Jonathan Z. Simon, Behtash Babadi

Presents a principled modeling and estimation paradigm for MEG source analysis, tailored to extracting the cortical origin of electrophysiological responses to continuous stimuli.

NeuroImage

Behrad Soleimani, Proloy Das, Joshua Kulasingham, Jonathan Z. Simon, Behtash Babadi

The authors consider the problem of determining Granger causal influences among sources that are indirectly observed through low-dimensional and noisy linear projections. They model the source dynamics as sparse vector autoregressive processes and estimate the model parameters directly from the observations, with no recourse to intermediate source localization.

54th Conference on Information Sciences and Systems