News Story

Tracing the Roots of a Supercomputer

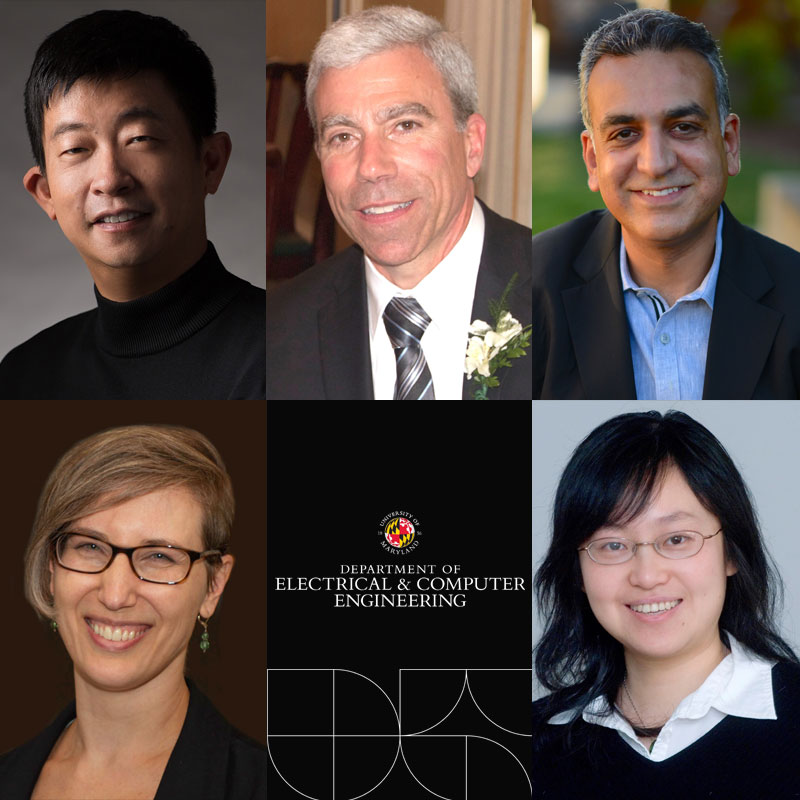

On November 9, 2022, alumnus David Bader (Ph.D. ’96, electrical engineering) was inducted into the Clark School of Engineering’s Innovation Hall of Fame, joining a small but distinguished community of inventors who use their knowledge, perseverance, innovation, and ingenuity to change how we do things in the world.

While the top 500 supercomputers operating in the world have roots in Bader’s early work to “democratize” supercomputing through the creation of the first commercial off-the-shelf (COTS)/open-source Linux supercomputer, he has also pioneered academic programs and research efforts in the field of data science.

You were practically programming for your father before you could read or write. How do you think this early experience shaped the direction of the work you would go on to do?

My father was a physical chemist and an early computational scientist, and my mother is an inorganic chemist, so they both get a lot of credit for my appreciation of the sciences and engineering.

Back in the 1970s, programming really meant the thoughtful use of resources because every computer was limited in terms of memory size, cost of operations, etc., so it set me in a direction of thinking about algorithms and how we can accelerate algorithms to run even faster.

My entry into parallel computing was in the early 1980s and I thought, how could we use multiple processors to solve the same problem faster? And what does that mean for algorithms? Up to that point, algorithms were synonymous with sequential programming. There was no concept of using multiple processors, so for me, it was a new frontier to explore how we can co-design new parallel computers and algorithms that will solve some of the most meaningful problems at hand.

For me, computing was solving problems in the physical world, whether it's coming from chemistry or biology or physics or national security. It was always about trying to solve a problem that mattered in the real world.

You frequently discuss using supercomputers and high-performance computing to solve the world’s “biggest challenges.” What challenges were you seeing in the ’90s that influenced your work?

At the time, I had a couple of areas of focus that I was very passionate about. One was thinking about our nation’s security and how we spread peace to everyone on the planet.

For me, understanding large data sets was important. Exploring data sets from remote sensing of the Earth, biological sequences, and social networks led the way to what we see today with big data and the massive scale of data science, high performance data analytics, and the area that I've pioneered in massive graph analytics.

The other types of problems that I wanted to solve came from areas in biology and genetics. Both of my parents were natural scientists; my brother Joel was a biomedical engineer and biochemist; and my twin sister, Deborah, also has her Ph.D. in genetics. For me, being a twin and having all this family working in biology and chemistry helped create a passion.

I used multiple processors, but when I went to publish that work, I was told by many outlets, ‘Oh, computer scientists do physics and chemistry. They don't do biology.’

So I did a lot of work in parallelizing important biology applications, even for commercial use. But it wasn't until a number of years later that the academic environment caught up and realized that computational biology was an area for further exploration. Now we see a lot of use in drug discovery, in drug design, and in understanding disease and clinical trial patients, and all of these areas are really rooted in that work.

I also looked at computer image and image processing. I wanted to be able to take, for instance, satellite imagery around the planet and understand our climate and climate change.

In fact, while at the University of Maryland, I was supported by NASA and a NASA Graduate Student Fellowship and worked with the geographers at Maryland, in addition to my Ph.D. advisor and postdoc advisor, Joseph JaJa and Larry Davis, to take satellite imagery from decades and understand how the climate was impacting the land cover: for example, what was happening with desertification in Africa, what was happening to the canopy of the rainforest, and what was happening to essentially the greenness of the planet.

And that was also a passion to look at how we are being good stewards of the planet.

You’ve established two departments and five degree programs during your career, with an emphasis on data science. What do you see as the future for the field of data science?

Data science by its very nature is collaborative since data can originate from so many sources. That could be within humanities, engineering, science, computing, business, or architecture, just about anything. So when you think about data science, you really have to understand the origins of the data and what questions you're asking of the data.

There are specializations in data science, and there are specializations in data engineering, which is how to manage, process, and transform those data sets, and so on.

I see this as a growing and emerging area, and right now we are seeing just the tip of the iceberg on the uses of data science to transform industries, to create data-driven industries that can monetize data sets, that can improve operational efficiencies, and that can make better decisions based on data.

That even includes policymakers who may run simulations or have digital twins of entire states or nations to understand the impact of laws that get created.

We're also really seeing this use of data exploding and areas like machine learning and AI taking center stage for being able to solve problems that we never imagined we could solve so efficiently and so quickly, and we're really at the forefront of this.

And there are jobs! One of the top job needs in the country today, if you look at the U.S. Labor Department and the needs across multiple states, is for data scientists, those who can come out and work not just in the tech sector but in every company, in every industry, making data useful for that organization.

What's also fascinating is that as we have access to more and more data sets, we can now think about the power of combining these together to solve today’s global grand challenges. To me, that's just a tremendous opportunity to improve the world and to make it a better place for everyone around the world.

You’ve often mentioned that one of the reasons for your early work in developing COTS/open-source Linux supercomputers was to “democratize” supercomputers and to “improve their affordability.” Why was this such an important aspect of your work?

I'm a first-generation American. My mother was a child Holocaust survivor from Europe and my dad’s parents immigrated to the United States. In the late 1970s, when I was in 5th grade, I started delivering newspapers to earn enough money to buy my own computer. In the early 1980s I discovered parallel computing, but to get into supercomputing meant that you needed millions of dollars to buy a unique commercial system.

Democratizing supercomputers meant making them accessible to anyone in the world who wished to work on important and computationally demanding problems.

I had a passion to harness multiple processors together to solve these problems, and I recognized early on that this would require harnessing the economics of commodity-based systems to make supercomputing ubiquitous rather than available to only elite organizations and governments, so we needed to identify technologies that would be affordable while supporting the high performance of traditional supercomputers.

Solutions to real-world grand challenges may come from anyone in the world, not just from the places where people could afford million-dollar supercomputers.

It was very important to me to understand that we needed to harness the economics of commodity-based systems in order to make supercomputing ubiquitous rather than in the hands of just a couple of organizations and governments.

We needed to find technologies that would be cost-effective and affordable and would supply the high performance that traditional supercomputers could. Democratizing it meant that any person around the planet who had access to electricity would be able to work on important problems that mattered.

And the places where we'd find solutions would naturally come from the many communities that we have on this planet. They may not just be in the places where people could afford million-dollar supercomputers. So that was what was important to me, that everyone had access, and that it was equitable access to these types of resources.

I think we've seen that proven out today in where these technologies have led. Every top supercomputer in the world is using the inventions I created, and they are also influencing the cloud computing that we see today.

What is going on with some of your current research?

One of my current research projects, which is supported by the National Science Foundation, is looking at the confluence of high-performance computing and data science. Many times, a data scientist has a data set that grows beyond what they can hold on their laptop, and they can no longer process it.

Python is the lingua franca of data scientists, and we're working on a new open-source framework called Arkoúda, the Greek word for bear. It's available on GitHub, and it's a joint project with the Department of Defense. We are creating a framework where you can have a supercomputer in the background running an open-source compiler called Chapel that was originally developed by Cray under DARPA funding about 20 years ago.

So, this is a fully open-source software stack, and we're creating algorithms that will give an analyst who knows only how to use Python the ability to manipulate these massive data sets that reside in the back end on a supercomputer.

Users don't need to be heroic programmers the way we've needed over the last 30 to 50 years on supercomputers. My group is also building out a module for graph analytics at this scale, called Arachnid, that will be able to take data sets that have tens of terabytes of information in them and run graph analytics as seamlessly and as easily as someone would with their Python code on their laptop.

We’re very excited because this once again democratizes data science by making the tools available so that anyone who can write in Python (these days I have high school students in my research group writing Python code quite well) can make use of a supercomputer just as easily as anyone in the world can these days.

So that excites me a lot, the research that we're pursuing here, and I think that's going to have a tremendous impact.

You frequently discuss the application of high-performance computing as a tool for addressing some of the world’s greatest challenges, and improving the world and the lives of those in it. What do you most hope is the future of supercomputing?

I would like to see the continued leveraging of commodity technologies and miniaturization of technologies so that we could deploy supercomputers ubiquitously. These systems must integrate new architectures that combine scientific computing capabilities with new technologies for supporting data science, machine learning, and artificial intelligence.

There are so many phenomenal uses that if everyone had access to a supercomputer (maybe we will see them in our next generation of smartphones), we would see just a tremendous amount of change when these technologies are easy to use and accessible by all.

That's really my dream, to make supercomputing access as simple as driving a car or reading a book or carrying your smartphone, so that everyone with a question can solve real-world grand challenge problems quite easily.

What is an aspect of your work you seldom get to discuss?

One feeling that I've had in engineering is that we're often focused on technologies and solutions, which is a fantastic endeavor, but we often forget about people and the problems that we face in the world that I think are really important.

My family history has really influenced me to pursue making a difference in this world. I had the opportunity to be here on this planet, and I knew from an early age that I wanted to make a difference, that I wanted to do something bigger than myself.

When I was a senior undergraduate in computer engineering, I needed a humanities and social sciences elective to finish out my general requirements, so a friend and I decided that we would take a course on history and religion, and in particular, a course on the history of the Holocaust. It was a very moving class for me, and it was while reading an essay for that class that I decided overnight that I would become vegan. It was more than just a dietary selection; it really was about understanding how we relate to people and how we relate to the planet and how we make this world a better place for everyone.

That really has been something that has been a core element of everything that I think about every day in terms of democratizing computing and data science, and solving global grand challenges.

It's really to make this world better for the generations to come, making it better and safer in every way. And I think people should stop to take a moment to think about what is important to them. That's one of the most important questions: to understand what's important to you.

And once you know that, then everything else I think is easy in your career.

But it's also really thinking about what it is that you want to accomplish. What is it that would really be your goal in life? Understand how you can make a difference, whether you're a college professor like me, or you are a schoolteacher, or you drive for Uber. Whatever it is, everyone has the ability to do that. For me that was very important.

I’ve also found that a vibrant community has been very important throughout my career. I've always strived to have a research group that is well-rounded in every aspect, because I find that is what provides better decisions and perspectives, and it also creates more creative thoughts, because the problems that we face on this planet may not look like us in particular, but may look like someone else on this planet.

So a wide range of thoughts and ideas is needed to solve these important problems, and we have to embrace that because I think that's what makes us great as a human race.

Published November 28, 2022