
Deep learning helps aerial robots gauge where they are

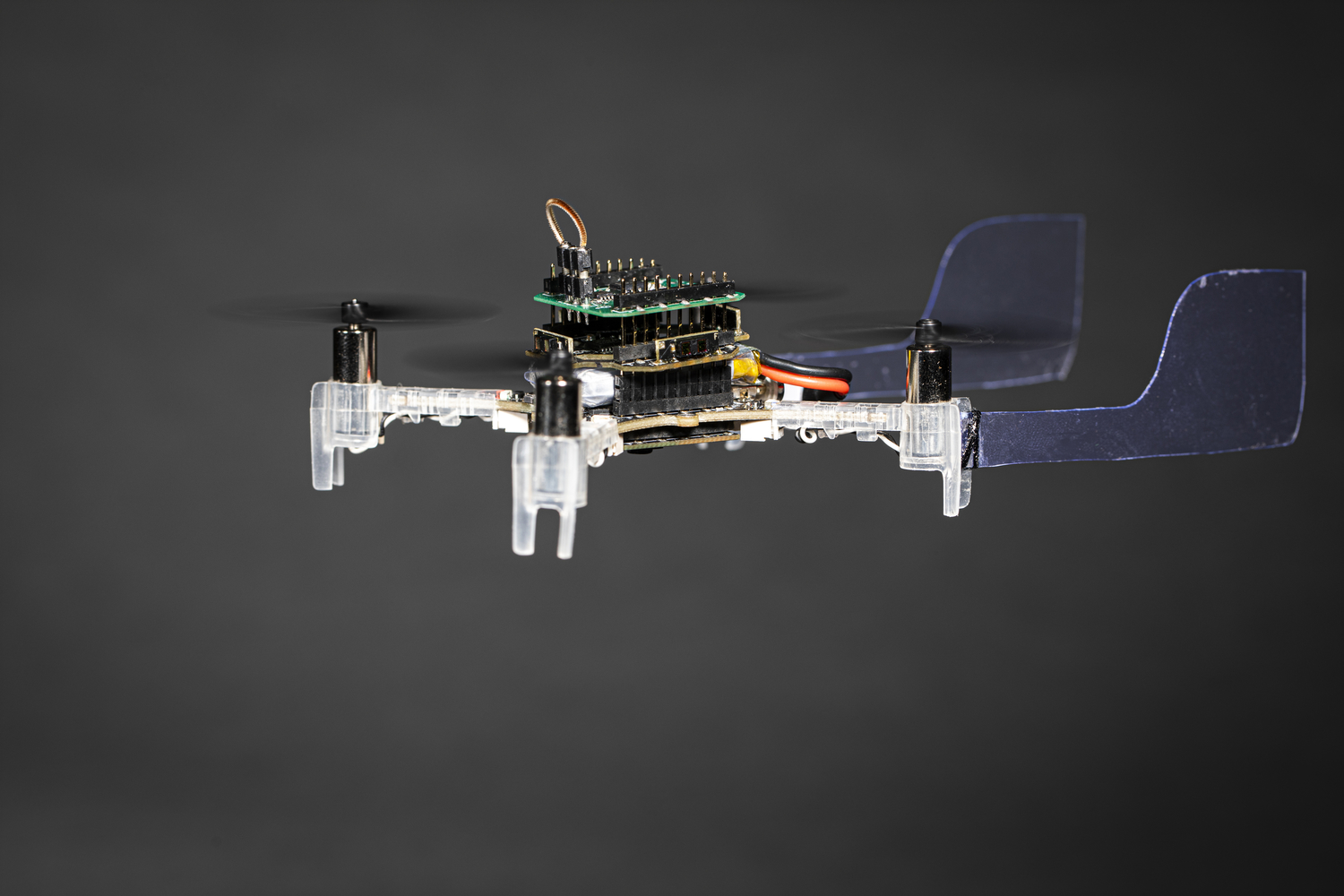

PRG Husky-360γ platform used in flight experiments. (Fig. 3 from the paper)

All robots need to be able to gauge their physical position in order to move safely through space and perform tasks. They accomplish this 3D “ego-motion” measurement using computer vision and other AI techniques that rely on information from onboard cameras and other sensors.

Small aerial robots are finding increased utility because of their safety, agility and usability in swarms. However, their odometry requirements are particularly demanding: they need low latency and high robustness while operating under tight size, weight, area and power (SWAP) constraints. Many odometry approaches that work on larger aerial robots do not scale down. Pairing visual sensors with Inertial Measurement Units (IMUs) has proven to be the best sensor combination for aerial robots.

Recently, deep learning approaches that use compression and hardware acceleration have gained momentum. These algorithms are highly accurate, robust and scalable, and they are easily adapted to aerial robots of different sizes. They learn to predict odometry in an end-to-end fashion, and their networks can be compressed to a smaller size, generally with a linear drop in accuracy, to meet SWAP constraints. The critical issue is that matching the accuracy of classical approaches generally makes them computationally heavy, which leads to larger latencies. However, leveraging hardware acceleration and more parallelizable architectures can mitigate this problem.

In the paper “PRGFlow: Benchmarking SWAP Aware Unified Deep Visual Inertial Odometry,” ISR-affiliated Professor Yiannis Aloimonos (CS/UMIACS), Associate Research Scientist Cornelia Fermüller and their students Nitin J. Sanket and Chahat Deep Singh present a simple way to estimate ego-motion/odometry on an aerial robot using deep learning that combines commonly found on-board sensors: an up- or down-facing camera, an altimeter source and an IMU. By using simple filtering methods to estimate attitude, “cheap” odometry can be obtained by feeding attitude-compensated frames to a network. The authors further provide a comprehensive analysis of warping combinations, network architectures and loss functions.
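
To give a sense of what a "simple filtering method" for attitude can look like, here is a minimal sketch of a complementary filter that blends gyroscope and accelerometer readings into roll and pitch estimates. This article does not say which filter the authors used, so the choice of a complementary filter, the function names, the axis convention (accelerometer reads roughly +g on the z axis when level and at rest) and the blending weight `alpha` are all assumptions made for illustration.

```python
import math


def accel_to_roll_pitch(ax, ay, az):
    """Roll and pitch (radians) implied by the measured gravity direction.

    Assumed convention: a level, stationary IMU reads about (0, 0, +g).
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


def complementary_filter_step(roll, pitch, gyro, accel, dt, alpha=0.98):
    """One update: trust the gyro short-term and the accelerometer long-term.

    gyro  -- (gx, gy, gz) body rates in rad/s; gx and gy are treated as
             roll and pitch rates, which is a small-angle approximation.
    accel -- (ax, ay, az) accelerometer reading in m/s^2.
    alpha -- hypothetical blending weight, not a value from the paper.
    """
    gx, gy, _ = gyro
    acc_roll, acc_pitch = accel_to_roll_pitch(*accel)
    roll = alpha * (roll + gx * dt) + (1.0 - alpha) * acc_roll
    pitch = alpha * (pitch + gy * dt) + (1.0 - alpha) * acc_pitch
    return roll, pitch


def run_filter(samples, dt, roll=0.0, pitch=0.0, alpha=0.98):
    """Run the filter over an iterable of (gyro, accel) samples."""
    for gyro, accel in samples:
        roll, pitch = complementary_filter_step(roll, pitch, gyro, accel, dt, alpha)
    return roll, pitch


if __name__ == "__main__":
    # A stationary, level IMU: a wrong initial estimate decays toward zero.
    still = [((0.0, 0.0, 0.0), (0.0, 0.0, 9.81))] * 500
    print(run_filter(still, dt=0.01, roll=0.5, pitch=-0.3))
```

In a pipeline like the one described above, the resulting roll and pitch would be used to warp each camera frame so that it appears to come from a level camera before the frame is passed to the network. That warping step depends on the camera calibration and is not shown here.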

All approaches were benchmarked for speed and accuracy on commonly used hardware with different SWAP constraints, and the results can serve as a reference manual for both researchers and practitioners. The authors also provide extensive real-flight odometry results that highlight the robustness of the approach without any fine-tuning or re-training. Utilizing deep learning when failure is often expected would most likely lead to a more robust system.

The researchers are part of the University of Maryland’s Perception and Robotics Group. The research was supported by the Brin Family Foundation, the Northrop Grumman Mission Systems University Research Program, ONR under grant award N00014-17-1-2622, and the National Science Foundation under grant BCS 1824198. A Titan-Xp GPU used in the experiments was granted by NVIDIA.

Published July 6, 2020