News Story

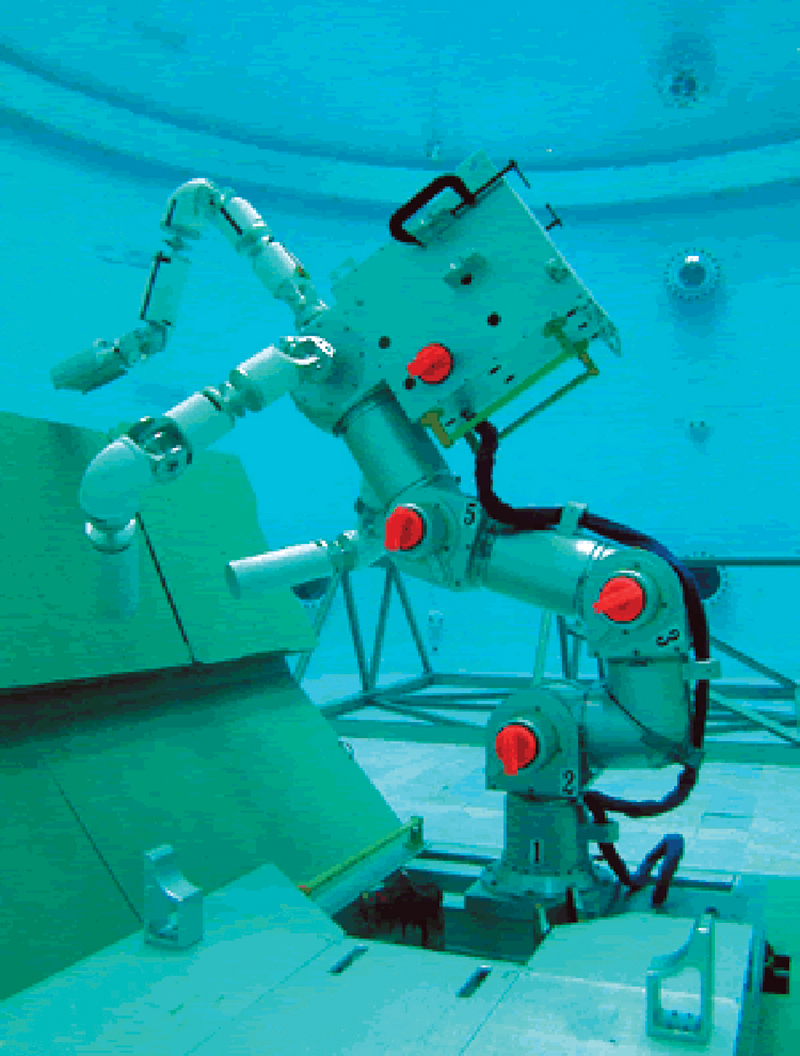

Planning and learning algorithms developed for refinement acting engine

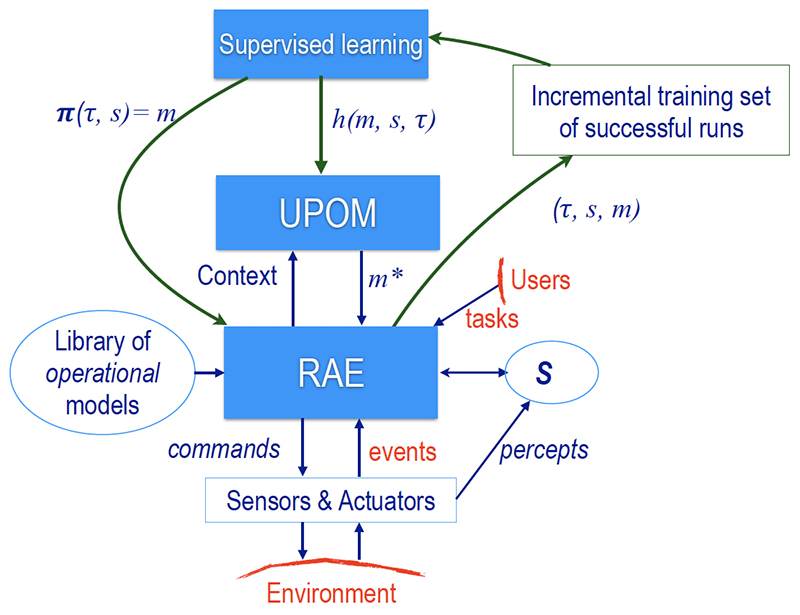

Integration of acting, planning and learning (Fig.4 from the paper)

A new paper, “Integrating acting, planning, and learning in hierarchical operational models,” continues the long research partnership of Professor Dana Nau (CS/ISR) and his students with his colleagues and co-authors Malik Ghallab, of the Laboratory for Analysis and Architecture of Systems, French National Center for Scientific Research (LAAS-CNRS), Toulouse, France; and Paolo Traverso, Director of the Center for Information and Communication Technology at Fondazione Bruno Kessler, Trento, Italy.

The paper was written by Sunandita Patra and Amit Kumar, Computer Science graduate students advised by Nau; alumnus James Mason (CS B.S. 2019); and Ghallab, Traverso and Nau. It received an honorable mention in the best student paper category at the 2020 International Conference on Automated Planning and Scheduling (ICAPS), originally scheduled for June 2020 and recently postponed to fall 2020. The conference is sponsored by the Association for the Advancement of Artificial Intelligence (AAAI).

Nau, Ghallab and Traverso co-authored the 2004 book Automated Planning; the 2014 ACM Artificial Intelligence position paper, “The Actor’s View of Automated Planning and Acting,” which advocates a hierarchical organization of an actor’s deliberation functions, with online planning throughout the acting process; and the 2016 sequel to Automated Planning, Automated Planning and Acting, which proposed a Refinement Acting Engine (RAE) in chapter 3 (see ISR’s 2016 story about the book).

In 2019, Ghallab, Nau and Traverso worked with students Patra, Mason, and Kumar to propose RAEplan, a planner for RAE that figures prominently in the current paper. This work improved RAE’s efficiency and success ratio and is appealing for its powerful representation and seamless integration of reasoning and acting.

Specifically, the paper presents new planning and learning algorithms for RAE, which uses hierarchical operational models to perform tasks in dynamically changing environments. A novel planning procedure called UPOM (UCT Planner for Operational Models) performs a UCT-like search in the space of operational models to find a near-optimal method for the task and context at hand. (UCT, short for Upper Confidence bounds applied to Trees, is a type of Monte Carlo tree search algorithm used in decision processes, most notably in game play such as Go, chess and contract bridge.)
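For readers unfamiliar with UCT, the selection rule at its core can be illustrated with a small sketch. This is not the paper's UPOM algorithm. It shows only a single decision node using the standard UCB1 rule, and the names `ucb1_choice`, `choose_method`, and `simulate`, the toy method names, and the success probabilities are all hypothetical, chosen for illustration.

```python
import math
import random


def ucb1_choice(stats, c=math.sqrt(2)):
    """Pick the next method to try.

    stats maps each method name to a (visits, total_reward) pair.
    Untried methods are chosen first; otherwise the method with the
    highest UCB1 score (mean reward plus exploration bonus) is chosen.
    """
    for method, (visits, _) in stats.items():
        if visits == 0:
            return method
    total_visits = sum(visits for visits, _ in stats.values())

    def score(method):
        visits, total_reward = stats[method]
        mean = total_reward / visits
        bonus = c * math.sqrt(math.log(total_visits) / visits)
        return mean + bonus

    return max(stats, key=score)


def choose_method(methods, simulate, n_rollouts=500, seed=0):
    """Run n_rollouts simulated trials and return the most-visited method.

    simulate(method, rng) is a hypothetical stand-in for a simulated
    execution; it must return a reward in [0, 1].
    """
    rng = random.Random(seed)
    stats = {m: (0, 0.0) for m in methods}
    for _ in range(n_rollouts):
        method = ucb1_choice(stats)
        reward = simulate(method, rng)
        visits, total_reward = stats[method]
        stats[method] = (visits + 1, total_reward + reward)
    return max(stats, key=lambda m: stats[m][0])


if __name__ == "__main__":
    # Hypothetical success probabilities for three toy methods.
    success_prob = {"direct_route": 0.9, "detour": 0.5, "wait_and_retry": 0.2}

    def simulate(method, rng):
        # Reward 1.0 on simulated success, 0.0 on simulated failure.
        return 1.0 if rng.random() < success_prob[method] else 0.0

    print(choose_method(list(success_prob), simulate))
```

The exploration bonus shrinks as a method accumulates visits, so rollouts concentrate on methods that look promising while weaker ones are still sampled occasionally. A full UCT search applies the same rule recursively at every decision point in the tree.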

The learning strategies acquire, from online acting experiences and/or simulated planning results, a mapping from decision contexts to method instances, as well as a heuristic function to guide UPOM. Experimental results show that UPOM and the learning strategies significantly improve RAE’s performance in four test domains, as measured by efficiency and success ratio.

Published March 26, 2020