2023

Eadom Dessalene, Michael Maynord, Cornelia Fermüller, Yiannis Aloimonos

Motivated by Goldman’s Theory of Human Action—a framework in which action decomposes into 1) base physical movements, and 2) the context in which they occur—the authors propose a novel learning formulation for motion and context, where context is derived as the complement to motion.

2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV 2024)

Eadom Dessalene, Michael Maynord, Cornelia Fermüller, Yiannis Aloimonos

LEAP is a novel method for generating video-grounded action programs through use of a Large Language Model (LLM). These action programs represent the motoric, perceptual, and structural aspects of action, and consist of sub-actions, pre- and post-conditions, and control flows. LEAP’s action programs are centered on egocentric video and employ recent developments in LLMs both as a source for program knowledge and as an aggregator and assessor of multimodal video information.

arXiv.org

Dehao Yuan, Furong Huang, Cornelia Fermüller, Yiannis Aloimonos

Given samples of a continuous object (e.g. a function), Hyper-Dimensional Function Encoding (HDFE) produces an explicit vector representation of the given object, invariant to the sample distribution and density. Sample distribution and density invariance enables HDFE to consistently encode continuous objects regardless of their sampling, and therefore allows neural networks to receive continuous objects as inputs for machine learning tasks such as classification and regression. HDFE does not require any training and is proved to map the object into an organized embedding space, which facilitates the training of the downstream tasks. In addition, the encoding is decodable, which enables neural networks to regress continuous objects by regressing their encodings. HDFE can be used as an interface for processing continuous objects.

arXiv.org

Amir-Hossein Shahidzadeh, Seong Jong Yoo, Pavan Mantripragada, Chahat Deep Singh, Cornelia Fermüller, Yiannis Aloimonos

Tactile exploration plays a crucial role in understanding object structures for fundamental robotics tasks such as grasping and manipulation. However, efficiently exploring such objects using tactile sensors is challenging, due to the large-scale unknown environments and limited sensing coverage of these sensors. Here, the authors present AcTExplore, an active tactile exploration method driven by reinforcement learning for object reconstruction at scales that automatically explores the object surfaces in a limited number of steps. Through sufficient exploration, this algorithm incrementally collects tactile data and reconstructs 3D shapes of the objects as well, which can serve as a representation for higher-level downstream tasks. The method achieves an average of 95.97% IoU coverage on unseen YCB objects while just being trained on primitive shapes.

arXiv.org

Matthew S. Evanusa, Vaishnavi Patil, Michelle Girvan, Joel Goodman, Cornelia Fermüller, Yiannis Aloimonos

Robots are active agents that operate in dynamic scenarios with noisy sensors. Predictions based on these noisy sensor measurements often lead to errors and can be unreliable. To this end, roboticists have used fusion methods using multiple observations. Lately, neural networks have dominated the accuracy charts for percep- tion-driven predictions for robotic decision-making and often lack uncertainty metrics associated with the pre- dictions. Here, the authors present a mathematical formulation to obtain the heteroscedastic aleatoric uncertainty of any arbitrary distribution without prior knowledge about the data. The approach has no prior assumptions about the prediction labels and is agnostic to network architecture.

International Conference on Artificial Neural Networks (ICANN 2023) as compiled in International Conference on Artificial Neural Networks and Machine Learning by Springer

Nitin Sanket, Chahat Deep Singh, Cornelia Fermüller, Yiannis Aloimonos

Robots are active agents that operate in dynamic scenarios with noisy sensors. Predictions based on these noisy sensor measurements often lead to errors and can be unreliable. To this end, roboticists have used fusion methods using multiple observations. Lately, neural networks have dominated the accuracy charts for percep- tion-driven predictions for robotic decision-making and often lack uncertainty metrics associated with the pre- dictions. Here, the authors present a mathematical formulation to obtain the heteroscedastic aleatoric uncertainty of any arbitrary distribution without prior knowledge about the data. The approach has no prior assumptions about the prediction labels and is agnostic to network architecture.

Science Robotics

Chinmaya Devaraj, Cornelia Fermüller, Yiannis Aloimonos

GCN-based zero-shot learning approaches commonly use fixed input graphs representing external knowledge that usually comes from language. However, such input graphs fail to incorporate the visual domain nuances. The authors introduce a method to ground the external knowledge graph visually. The method is demonstrated on a novel concept of grouping actions according to a shared notion and shown to be of superior performance in zero-shot action recognition on two challenging human manipulation action datasets, the EPIC Kitchens dataset, and the Charades dataset. They further show that visually grounding the knowledge graph enhances the performance of GCNs when an adversarial attack corrupts the input graph.

Computer Vision Foundation workshop

Snehesh Shrestha, Ishan Tamrakar, Cornelia Fermüller, Yiannis Aloimonos

Haptic sensing can provide a new dimension to enhance people’s musical and cinematic experiences. However, designing a haptic pattern is neither intuitive nor trivial. Imagined haptic patterns tend to be different from experienced ones. As a result, researchers use simple step-curve patterns to create haptic stimul. Here, the authors designed and developed an intuitive haptic pattern designer that lets you rapidly prototype creative patterns. The simple architecture, wireless connectivity, and easy-to-program communication protocol make it modular and easy to scale. In this demo, workshop participants can select from a library of haptic patterns and design new ones. They can feel the pattern as they make changes in the user interface.

arXiv.org

Neal Anwar, Chethan Parameshwara, Cornelia Fermüller, Yiannis Aloimonos

Hyperdimensional Computing (HDC) is an emerging neuroscience-inspired framework wherein data of various modalities can be represented uniformly in high-dimensional space as long, redundant holographic vectors. When equipped with the proper Vector Symbolic Architecture (VSA) and applied to neuromorphic hardware, HDC-based networks have been demonstrated to be capable of solving complex visual tasks with substantial energy efficiency gains and increased robustness to noise when compared to standard Artificial Neural Networks (ANNs). Here, the authors present a bipolar HD encoding mechanism designed for encoding spatiotemporal data, which captures the contours of DVS-generated time surfaces created by moving objects by fitting to them local surfaces which are individually encoded into HD vectors and bundled into descriptive high-dimensional representations.

IEEE 2023 57th Annual Conference on Information Sciences and Systems (CISS)

Michael Maynord, M. Mehdi Farhangi, Cornelia Fermüller, Yiannis Aloimonos, Gary Levine, Nicholas Petrick, Berkman Sahiner, Aria Pezeshk

Proposes a cooperative labeling method that allows researchers to make use of weakly annotated medical imaging data for training a machine learning algorithm. As most clinically produced data is weakly-annotated - produced for use by humans rather than machines, and lacking information machine learning depends upon - this approach allows researchers to incorporate a wider range of clinical data and thereby increase the training set size.

Medical Physics

Snehesh Shrestha, William Sentosatio, Huiashu Peng, Cornelia Fermüller, Yiannis Aloimonos

This work introduces FEVA, a video annotation tool with streamlined interaction techniques and a dynamic interface that makes labeling tasks easy and fast. FEVA focuses on speed, accuracy, and simplicity to make annotation quick, consistent, and straightforward.

arXiv.org

2022

Hussam Amrouch, Mohsen Imani, Xun Jiao, Yiannis Aloimonos, Cornelia Fermuller, Dehao Yuan, Dongning Ma, Hamza E. Barkam, Paul Genssler, Peter Sutor

Hyperdimensional Computing (HDC) is rapidly emerging as an attractive alternative to traditional deep learning algorithms. Despite the profound success of Deep Neural Networks (DNNs) in many domains, the amount of computational power and storage that they demand during training makes deploying them in edge devices very challenging if not infeasible. This, in turn, inevitably necessitates streaming the data from the edge to the cloud which raises serious concerns when it comes to availability, scalability, security, and privacy. Further, the nature of data that edge devices often receive from sensors is inherently noisy. However, DNN algorithms are very sensitive to noise, which makes accomplishing the required learning tasks with high accuracy immensely difficult. In this paper, we aim at providing a comprehensive overview of the latest advances in HDC.

2022 International Conference on Hardware/Software Codesign and System Synthesis (CODES+ISSS)

Chahat Deep Singh, Riya Kumari, Cornelia Fermüller, Nitin J. Sanket, Yiannis Aloimonos

WorldGen is an open-source framework to autonomously generate countless structured and unstructured 3D photorealistic scenes such as city view, object collection, and object fragmentation along with its rich ground truth annotation data. WorldGen being a generative model, the user has full access and control to features such as texture, object structure, motion, camera and lens properties for better generalizability by diminishing the data bias in the network. The authors demonstrate the effectiveness of WorldGen by presenting an evaluation on deep optical flow. They hope such a tool can open doors for future research in a myriad of domains related to robotics and computer vision by reducing manual labor and the cost of acquiring rich and high-quality data.

arXiv.org

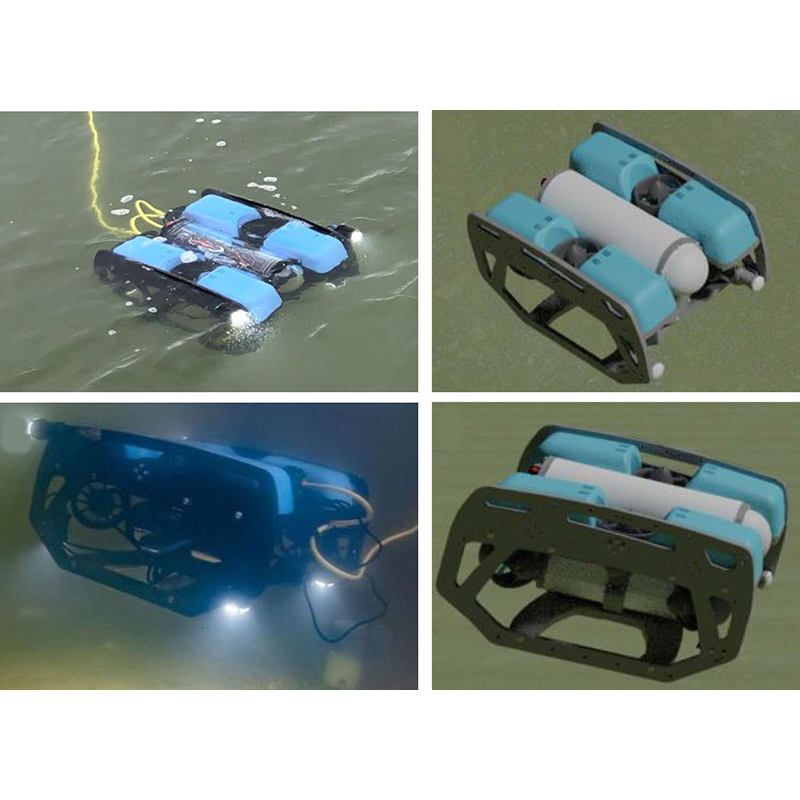

Xiaomin Lin, Nitin J. Sanket, Nare Karapetyan, Yiannis Aloimonos

A new way to mathematically model oysters and render images of oysters in simulation. This new method can boost detection performance with minimal real data, especially when used in conjunction with underwater robots.

arXiv.org

Peter Sutor, Dehao Yuan, Douglas Summers-Stay, Cornelia Fermüller, Yiannis Aloimonos

The authors explore the notion of using binary hypervectors to directly encode the final, classifying output signals of neural networks in order to fuse differing networks together at the symbolic level.

arXiv.org

Matthew Evanusa, Snehesh Shrestha, Vaishnavi Patil, Cornelia Fermüller, Michelle Girvan & Yiannis Aloimonos

Echo State Networks are a class of recurrent neural networks that can learn to regress on or classify sequential data by keeping the recurrent component random and training only on a set of readout weights, which is of interest to the current edge computing and neuromorphic community. However, they have struggled to perform well with regression and classification tasks and therefore, could not compete in performance with traditional RNNs, such as LSTM and GRU networks. To address this limitation, the authors have developed a hybrid network called Parallelized Deep Readout Echo State Network that combines the deep learning readout with a fast random recurrent component, with multiple ESNs computing in parallel.

SN Computer Science (Springer)

2021

Behzad Sadrfaridpour, Yiannis Aloimonos, Miao Yu, Yang Tao, Donald Webster

To test the idea that advancements in robotics and artificial intelligence offer the potential to improve the monitoring of oyster beds, the researchers prepared a remote operated underwater vehicle (ROV) with a camera and filmed in the Chesapeake Bay. They then used these videos to train convolutional neural networks (CNNs) to count oysters and track them in consecutive image frames so they are not identified multiple times.

arXiv.org

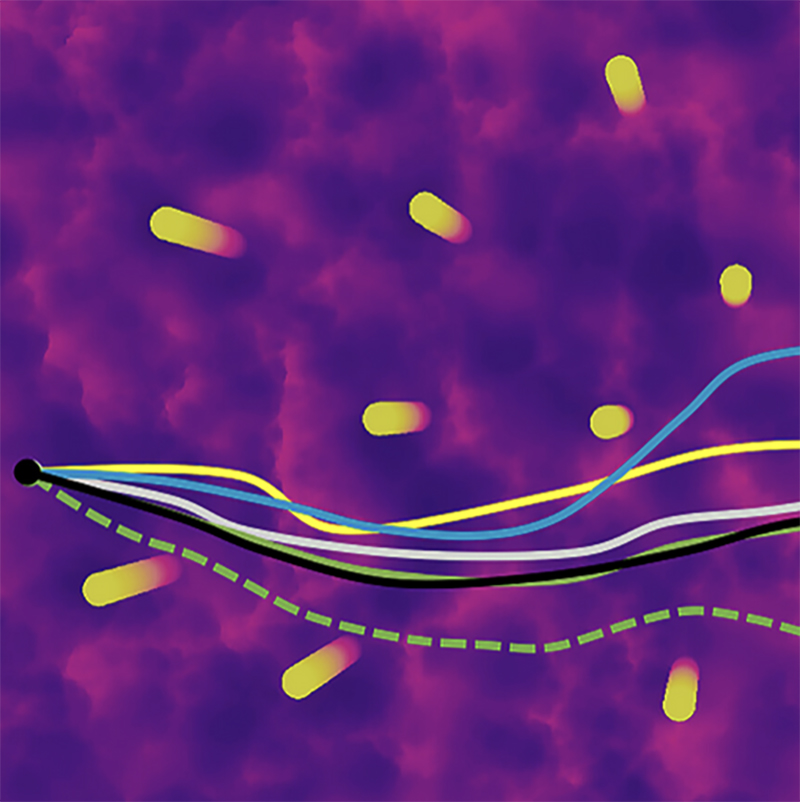

Chethan M. Parameshwara, Simin Li, Cornelia Fermüller, Nitin J. Sanket, Matthew S. Evanusa, Yiannis Aloimonos

The researchers propose SpikeMS, the first deep encoder-decoder SNN architecture for the real-world large-scale problem of motion segmentation using the event-based DVS camera as input.

arXiv.org

2020

Matthew Evanusa, Cornelia Fermüller, Yiannis Aloimonos

The researchers present a novel Deep Reservoir Network for time series prediction and classification that learns through non-differentiable hidden reservoir layers using a biologically-inspired back propagation alternative. This alternative, called Direct Feedback Alignment, resembles global dopamine signal broadcasting in the brain. The researchers demonstrate its efficacy on two real-world multidimensional time series datasets.

arXiv.org

Matthew Evanusa, Cornelia Fermüller, Yiannis Aloimonos

The researchers show that a large, deep layered spiking neural network with dynamical, chaotic activity mimicking the mammalian cortex with biologically-inspired learning rules, such as STDP, is capable of encoding information from temporal data.

arXiv.org

Matthew Evanusa, Snehesh Shrestha, Michelle Girvan, Cornelia Fermüller, Yiannis Aloimonos

Demonstrates the use of a backpropagation hybrid mechanism for parallel reservoir computingwith a meta ring structure and its application on a real-world gesture recognition dataset. This mechanism can be used as an alternative to state of the art recurrent neural networks, LSTMs and GRUs.

arXiv.org

Eadom Dessalene, Michael Maynord, Chinmaya Devaraj, Cornelia Fermüller, Yiannis Aloimonos

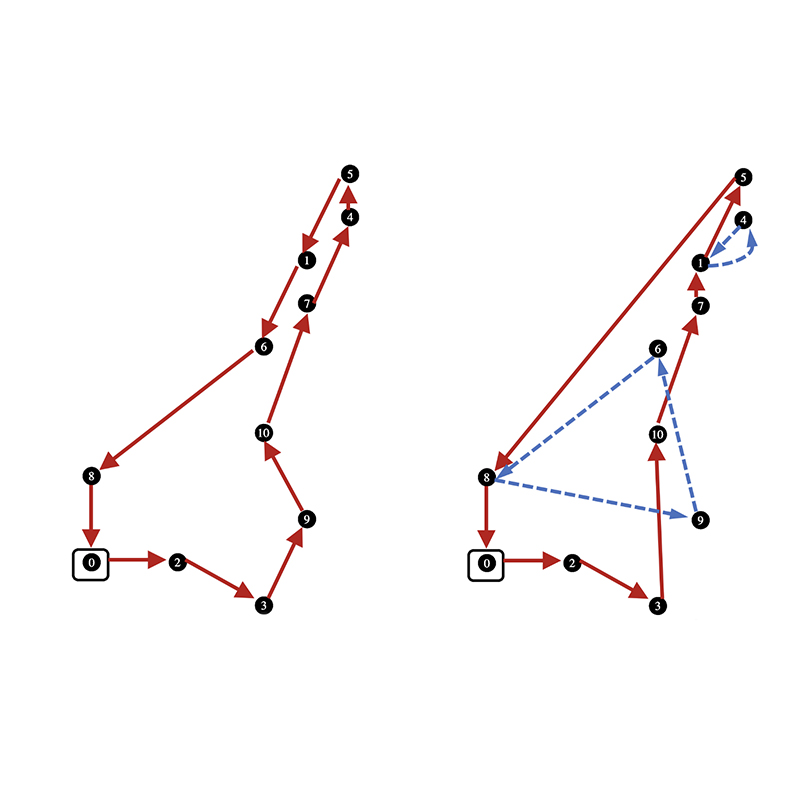

Introduces Egocentric Object Manipulation Graphs (Ego-OMG):a novel representation for activity modeling and anticipation of near future actions.

arXiv.org

Anton Mitrokhin, Peter Sutor, Douglas Summers-Stay, Cornelia Fermüller, Yiannis Aloimonos

By using hashing neural networks to produce binary vector representations of images, the authors show how hyperdimensional vectors can be constructed such that vector-symbolic inference arises naturally out of their output.

Frontiers in Robotics and AI

Anton Mitrokhin, Zhiyuan Hua, Cornelia Fermüller, Yiannis Aloimonos

Presents a Graph Convolutional neural network for the task of scene motion segmentation by a moving camera. Describes spatial and temporal features of event clouds, which provide cues for motion tracking and segmentation.

Computer Vision Foundation

Chethan M. Parameshwara, Nitin J. Sanket, Arjun Gupta, Cornelia Fermüller, Yiannis Aloimonos

A solution to multi-object motion segmentation using a combination of classical optimization methods along with deep learning and does not require prior knowledge of the 3D motion and the number and structure of objects.

arXiv.org

John Kanu, Eadom Dessalene, Xiaomin Lin, Cornelia Fermüller, Yiannis Aloimonos

A novel robotic agent framework for learning to perform temporally extended tasks using spatial reasoning in a deep reinforcement learning framework, by sequentially imagining visual goals and choosing appropriate actions to fulfill imagined goals.

arXiv.org

1996

Cornelia Fermüller, Yiannis Aloimonos

If 3D rigid motion can be correctly estimated from image sequences, the structure of the scene can be correctly derived using the equations for image formation. However, an error in the estimation of 3D motion will result in the computation of a distorted version of the scene structure. Of computational interest are these regions in space where the distortions are such that the depths become negative, because in order for the scene to be visible it has to lie in front of the image, and thus the corresponding depth estimates have to be positive. The stability analysis for the structure from motion problem presented in this paper investigates the optimal relationship between the errors in the estimated translational and rotational parameters of a rigid motion that results in the estimation of a minimum number of negative depth values. The input used is the value of the flow along some direction, which is more general than optic flow or correspondence. For a planar retina it is shown that the optimal configuration is achieved when the projections of the translational and rotational errors on the image plane are perpendicular. Furthermore, the projection of the actual and the estimated translation lie on a line through the center. For a spherical retina, given a rotational error, the optimal translation is the correct one; given a translational error, the optimal rotational error depends both in direction and value on the actual and estimated translation as well as the scene in view. The proofs, besides illuminating the confounding of translation and rotation in structure from motion, have an important application to ecological optics. The same analysis provides a computational explanation of why it is easier to estimate self-motion in the case of a spherical retina and why shape can be estimated easily in the case of a planar retina, thus suggesting that nature’s design of compound eyes (or panoramic vision) for flying systems and camera-type eyes for primates (and other systems that perform manipulation) is optimal.

International Journal of Computer Vision

2020

Maria Coelho, Mark Austin, Shivam Mishra, Mark Blackburn

Due to remarkable advances in computer, communications and sensing technologies over the past three decades,large-scale urban systems are now far more heterogeneous and automated than their predecessors. They may, in fact, be connected to other types of systems in completely new ways. These characteristics make the tasks of system design, analysis and integration of multi-disciplinary concerns much more difficult than in the past. We believe these challenges can be addressed by teaching machines to understand urban networks. This paper explores opportunities for using a recently developed graph autoencoding approach to encode the structure and associated network attributes as low-dimensional vectors. We exercise the proposed approach on a problem involving identification of leaks in urban water distribution systems.

IARIA International Journal on Advances in Networks and Services

2023

Armin Lederer, Erfaun Noorani, John Baras, Sandra Hirche

While the focus of inhibitory control has been on risk-neutral formulations, human studies have shown a tight link between response inhibition and risk attitude. Inspired by this insight, the authors propose a flexible, risk-sensitive method for inhibitory control. Our method is based on a risk-aware condition for value functions, which guarantees the satisfaction of state constraints.

arXiv.org

Erfaun Noorani, Christos Mavridis, John Baras

Incorporating risk in the decision-making process has been shown to lead to significant performance improvement in optimal control and reinforcement learning algorithms. Here, the authors construct a temporal-difference risk-sensitive reinforcement learning algorithm using the exponential criteria commonly used in risk-sensitive control. The proposed method resembles an actor-critic architecture with the ‘actor’ implementing a policy gradient algorithm based on the exponential of the reward-to-go, which is estimated by the ‘critic.’ The novelty of the update rule of the ‘critic’ lies in the use of a modified objective function that corresponds to the underlying multiplicative Bellman’s equation.

2023 American Control Conference

2022

Erfaun Noorani, Christos Mavridis, John Baras

Risk-sensitive reinforcement learning algorithms have been studied to introduce robustness and sample efficiency, and lead to better real-life performance. Here, the authors introduce new model-free risk-sensitive reinforcement learning algorithms as variations of widely-used Policy Gradient algorithms with similar implementation properties.

arXiv.org

Christos Mavridis, John Baras

Hierarchical learning algorithms that gradually approximate a solution to a data-driven optimization problem are essential to decision-making systems, especially under limitations on time and computational resources. In this study, the authors introduce a general-purpose hierarchical learning architecture based on the progressive partitioning of a possibly multi-resolution data space. The optimal partition is gradually approximated by solving a sequence of optimization sub-problems that yield a sequence of partitions with increasing number of subsets. The authors show that the solution of each optimization problem can be estimated online using gradient-free stochastic approximation updates.

arXiv.org

Erfaun Noorani, John Baras

Trust facilitates collaboration and coordination in teams and is paramount to achieving optimality in the absence of direct communication and formal coordination devices. The authors investigate the influence of agents' risk-attitudes on trust and the emergence of coordination in multi-agent environments. They consider Independent Risk-sensitive Policy Gradient, Risk-sensitive REINFORCE, RL-agents in repeated 2-agent coordination games. They suggest that risk-sensitive agents could achieve better results in multi-agent task environments.

2022 European Control Conference (ECC)

Christos Mavridis, Erfaun Noorani, John Baras

Prototype-based learning methods have been extensively studied as fast, recursive, data-driven, interpretable, and robust learning algorithms. The authors study the effect of entropy regularization in prototype-based learning regarding (i) robustness with respect to the dataset and the initial conditions, and (ii) the generalization properties of the learned representation. A duality relationship, with respect to a Legendre-type transform, between free energy and Kulback-Leibler divergence measures, is used to show that entropy-regularized prototype-based learning is connected to exponential objectives associated with risk-sensitive learning.

2022 30th Mediterranean Conference on Control and Automation (MED)

Christos Mavridis, George Kontudis, John Baras

Here, the authors introduce a sparse Gaussian process regression model whose covariance function is parameterized by the locations of a progressively growing set of pseudo-inputs generated by an online deterministic annealing optimization algorithm. This is an active learning approach, which, in contrast to most existing works, can modify already selected pseudo-inputs and is trained with recursive, gradient-free updates.

61st IEEE Conference on Decision and Control (2022)

Christos Mavridis, John Baras

The authors introduce a learning model designed to meet the needs of applications in which computational resources are limited, and robustness and interpretability are prioritized.

arXiv.org

Anousheh Gholami, Nariman Torkzaban, John Baras

Federated learning (FL) has received significant attention from both academia and industry, as an emerging paradigm for building machine learning models in a communication-efficient and privacy preserving manner. It enables potentially a massive number of resource constrained agents (e.g. mobile devices and IoT devices) to train a model by a repeated process of local training on agents and centralized model aggregation on a central server. The paper proposes trust as a metric to measure the trustworthiness of the FL agents and thereby enhance the security of the FL training.

2022 IEEE 19th Annual Consumer Communications & Networking Conference (CCNC)

2021

Christos Mavridis, John Baras

Principles from mathematics, system theory, and optimization are used to investigate the structure of a data-agnostic learning architecture that resembles the “one learning algorithm” believed to exist in the visual and auditory cortices of the human brain. The authors' approach consists of a closed-loop system with (i) a multi-resolution analysis pre-processor, (ii) a group-invariant feature extractor, and (iii) a progressive knowledge-based learning module, along with multi-resolution feedback loops that are used for learning.

arXiv.org

Nilesh Suriyarachchi, John Baras

The communication and sensing capabilities of modern connected autonomous vehicles (CAVs) will allow new approaches in control to help solve the problem of stop-and-go waves in highway networks. The paper introduces a communication-based cooperative control method for CAVs in multi-lane highways in a mixed traffic setting. Each vehicle is able to take proactive control actions. This is an improvement over existing reactive methods which rely on shock waves already being present. In addition, the new method’s performance is independent of the highway structure; the algorithm performs identically on ring roads like “beltways” and straight roads. The method allows for proactive control application and exhibits good shock wave dissipation performance even when only a few CAVs are present amongst conventional vehicles. The results were verified on a three-lane circular highway loop using realistic traffic simulation software.

IEEE 94th Vehicular Technology Conference (Fall 2021)

Nilesh Suriyarachchi, Faizan Tariq, Christos Mavridis, John Baras

Highway on-ramp merge junctions remain a major bottleneck in transportation networks. However, with the introduction of Connected Autonomous Vehicles (CAVs) with advanced sensing and communication capabilities modern algorithms can capitalize on the cooperation between vehicles. This paper enhances highway merging efficiency by optimally coordinating CAVs in order to maximize the flow of vehicles while satisfying all safety constraints.

2021 IEEE International Intelligent Transportation Systems Conference (ITSC)

Christos Mavridis, John Baras

An online prototype-based learning algorithm for clustering and classification, based on the principles of deterministic annealing.

arXiv.org

2020

Fatemeh Alimardani, Nilesh Suriyarachchi, Faizan Tariq, John Baras

Explores the integration of two of the most common traffic management strategies, namely, ramp metering and route guidance, into existing highway networks with human-driven vehicles.

Chapter in the forthcoming book, Transportation Systems for Smart, Sustainable, Inclusive and Secure Cities

Christos Mavridis, John Baras

The researchers investigate the convergence properties of stochastic vector quantization (VQ) and its supervised counterpart, Learning Vector Quantization (LVQ), using Bregman divergences. We employ the theory of stochastic approximation to study the conditions on the initialization and the Bregman divergence generating functions, under which,the algorithms converge to desired configurations. These results formally support the use of Bregman divergences, such as the Kullback-Leibler divergence, in vector quantization algorithms.

johnbaras.com

Aneesh Raghavan, John Baras

This paper pertains to stochastic multi-agent decision-making problems. The authors revisit the concepts of event-state-operation-structure and relationship of incompatibility from literature, and use them as a tool to study the algebraic structure of a set of events. They consider a multi-agent hypothesis testing problem and show that the set of events forms an ortholattice. They then consider the binary hypothesis testing problem wth finite observation space.

arXiv.org

Aneesh Raghavan, John Baras

This paper pertains to hypothesis testing problems, specifically the problem of collaborative binary hypothesis testing.

arXiv.org

Ion Matei, Johan de Kleer, Christoforos Somarakis, Rahul Rai, John Baras

To understand changes in physical systems and facilitate decisions, explaining how model predictions are made is crucial. In this paper the authors use model-based interpretability, where models of physical systems are constructed by composing basic constructs that explain locally how energy is exchanged and transformed.

arXiv.org

2019

Mohammad Mamduhi, Karl Johansson, Ehsan Hashemi, John Baras

This paper proposes an event-triggered, add-on safety mechanism in a networked vehicular system that can adjust control parameters for timely braking while maintaining maneuverability.

arXiv.org

2022

Tianchen Liu, Nikhil Chopra, Jayesh Samtani

Many strawberry growers in some areas of the United States rely on customers to pick the fruits during the peak harvest months. Unfavorable weather conditions such as high humidity and excessive rainfall can quickly promote fruit rot and diseases. This study establishes an elementary farm information system to demonstrate timely information on the farm and fruit conditions (ripe, unripe) to the growers. The information system processes a video clip or a sequence of images from a camera to provide a map which can be viewed to estimate quantities of strawberries at different stages of ripeness. The farm map is built by state-of-the-art vision-based simultaneous localization and mapping (SLAM) techniques, which can generate the map and track the motion trajectory using image features. It can help farm labor direct traffic to specific strawberry locations within a farm where fruits need to be picked, or where berries need to be removed. The obtained system can help reduce farm revenue loss and promote sustainable crop production.

Proceedings of the 2022 Biology and Life Sciences Forum

2021

Kushal Chakrabarti, Nikhil Chopra

Accelerated gradient-based methods are being extensively used for solving non-convex machine learning problems, especially when the data points are abundant or the available data is distributed across several agents. Two of the prominent accelerated gradient algorithms are AdaGrad and Adam. AdaGrad is the simplest accelerated gradient method, particularly effective for sparse data. Adam has been shown to perform favorably in deep learning problems compared to other methods. Here the authors propose a new fast optimizer, Generalized AdaGrad (G-AdaGrad), for accelerating the solution of potentially non-convex machine learning problems.

arXiv.org

2020

Kushal Chakrabarti, Nirupam Gupta, Nikhil Chopra

This paper considers the multi-agent linear least-squares problem in a server-agent network. The system comprises multiple agents, each having a set of local data points, that are connected to a server. The goal for the agents is to compute a linear mathematical model that optimally fits the collective data points held by all the agents, without sharing their individual local data points. The paper proposes an iterative pre-conditioning technique that mitigates the deleterious effect of the conditioning of data points on the rate of convergence of the gradient-descent method.

arXiv.org

2023

Rance Cleaveland, Jeroen J. A. Keiren, Peter Fontana

The authors establish relative expressiveness results for several modal mu-calculi interpreted over timed automata. These mu-calculi combine modalities for expressing passage of (real) time with a general framework for defining formulas recursively; several variants have been proposed in the literature. We show that one logic, which we call Lrel ν,μ, is strictly more expressive than the other mu-calculi considered. It is also more expressive than the temporal logic TCTL, while the other mu-calculi are incomparable with TCTL in the setting of general timed automata.

arXiv.org

2022

Jeroen J. A. Keiren, Rance Cleaveland

This paper revisits soundness and completeness of proof systems for proving that sets of states in infinite-state labeled transition systems satisfy formulas in the modal mu-calculus. The authors' results rely on novel results in lattice theory, which give constructive characterizations of both greatest and least fixpoints of monotonic functions over complete lattices. They show how these results may be used to reconstruct the sound and complete tableau method for this problem due to Bradfield and Stirling. They also show how the flexibility of their lattice-theoretic basis simplifies reasoning about tableau-based proof strategies for alternative classes of systems. In particular, the authors extend the modal mu-calculus with timed modalities, and prove that the resulting tableaux method is sound and complete for timed transition systems.

arXiv.org

2020

Samuel Huang, Rance Cleaveland

This paper describes a technique for inferring temporal-logic properties for sets of finite data streams. Such data streams arise in many domains, including server logs, program testing, and financial and marketing data; temporal-logic formulas that are satisfied by all data streams in a set can provide insight into the underlying dynamics of the system generating these streams. The authors' approach makes use of so-called Linear Temporal Logic (LTL) queries, which are LTL formulas containing a missing subformula and interpreted over finite data streams. Solving such a query involves computing a subformula that can be inserted into the query so that the resulting grounded formula is satisfied by all data streams in the set. The paper describes an automaton-driven approach to solving this query-checking problem and demonstrates a working implementation via a pilot study.

arXiv.org

Peter Fontana, Rance Cleaveland

This report contains the descriptions of the timed automata (models) and the prop-erties (specifications) that are used as the “benchmark examples in Data structure choices for on-the-fly model checking of real-time systems” and “The power of proofs: New algorithms for timed automata model checking.” The four models from those sources are: CSMA, FISCHER, LEADER, and GRC. Additionally we include in this re-port two additional models: FDDI and PATHOS. These six models are often used to benchmark timed automata model checker speed throughout timed automata model checking papers.

arXiv.org

Rance Cleaveland

This paper shows how the use of Structural Operational Semantics (SOS) inthe style popularized by the process-algebra community can lead to a more succinct and useful construction for building finite automata from regular expressions.

arXiv.org

2022

Ahmed Adel Attia, Carol Espy-Wilson

A deep learning-based approach using Masked Autoencoders to accurately reconstruct the mistracked articulatory recordings for 41 out of 47 speakers of the XRMB dataset. (The University of Wisconsin X-Ray Microbeam (XRMB) dataset is one of various datasets that provide articulatory recordings synced with audio recordings.) The authors' model is able to reconstruct articulatory trajectories that closely match ground truth, even when three out of eight articulators are mistracked, and retrieve 3.28 out of 3.4 hours of previously unusable recordings.

arXiv.org

Deanna Kelly, Glen Coppersmith, John Dickerson, Carol Espy-Wilson, Hanna Michel, Philip Resnik, Carol Espy-Wilson

Machine learning approaches to mental health face a challenging tension between scalability and validity. Tools are needed to help predict symptoms, but important uncertainties remain. How can we be confident that remote data surveillance reflects an individual’s true clinical condition? How do we obtain such data at a large scale for machine learning techniques? The authors present work aimed at addressing these gaps.

Biological Psychiatry

Yashish Maduwantha Siriwardena, Ganesh Sivaraman, Carol Espy-Wilson

Multi-task learning (MTL) frameworks have proven to be effective in diverse speech related tasks like automatic speech recognition (ASR) and speech emotion recognition. This paper proposes a MTL framework to perform acoustic-to-articulatory speech inversion by simultaneously learning an acoustic to phoneme mapping as a shared task.

arXiv.org

Rahil Parikh, Ilya Kavalerov, Carol Espy-Wilson, Shihab Shamma

Recent advancements in deep learning have led to drastic improvements in speech segregation models. Despite their success and growing applicability, few efforts have been made to analyze the underlying principles that these networks learn to perform segregation. The authors analyze the role of harmonicity on two state-of-the-art Deep Neural Networks (DNN)-based models- Conv-TasNet and DPT-Net.

arXiv.org

Nadee Seneviratne, Carol Espy-Wilson

The authors develop a multimodal depression classification system using articulatory coordination features extracted from vocal tract variables and text transcriptions obtained from an automatic speech recognition tool that yields improvements of area under the receiver operating characteristics curve compared to unimodal classifiers.

arXiv.org

2021

Yashish Maduwantha Siriwardena, Chris Kitchen, Deanna L. Kelly, Carol Espy-Wilson

The authors investigate speech articulatory coordination in schizophrenia subjects exhibiting strong positive symptoms (e.g. hallucinations and delusions), using two distinct channel-delay correlation methods. They show that the schizophrenic subjects with strong positive symptoms and who are markedly ill pose more complex articulatory coordination patterns in facial and speech gestures than what is observed in healthy subjects.

arXiv.org; Proceedings of the 2021 International Conference on Multimodal Interaction (ICMI ’21)

Carol Espy-Wilson

Dr. Espy-Wilson discusses a speech inversion system her group has developed that maps the acoustic signal to vocal tract variables (TVs). The trajectories of the TVs show the timing and spatial movement of speech gestures. She explains how her group uses machine learning techniques to compute articulatory coordination features (ACFs) from the TVs. The ACFs serve as an input into a deep learning model for mental health classification. Espy-Wilson also illustrates the key acoustic differences between speech produced by subjects when they are mentally ill relative to when they are in remission and relative to healthy controls. The ultimate goal of this research is the development of a technology (perhaps an app) for patients that can help them, their therapists and caregivers monitor their mental health status between therapy sessions.

Keynote speech at the 2021 Acoustical Society of America Annual Meeting, June 8, 2021

View a press release from the Acoustical Society of America about this speech

Nadee Seneviratne, Carol Espy-Wilson

The paper proposes a new multi-stage architecture trained on vocal tract variable (TV)-based articulatory coordination features (ACFs) for depression severity classification which clearly outperforms the baseline models. The authors establish that the robustness of ACFs based on TVs holds beyond mere detection of depression and even in severity level classification. This work can be extended to develop a multi-modal system that can take advantage of textual information obtained through Automatic Speech Recognition tools. Linguistic features can reveal important information regarding the verbal content of a depressed patient relating to their mental health condition.

arXiv.org; accepted for Interspeech2021, Aug. 30-Sept. 3, 2021

Yashish Maduwantha Siriwardena, Chris Kitchen, Deanna L. Kelly, Carol Espy-Wilson

This study, conducted with AIM-HI funding, investigates speech articulatory coordination in schizophrenia subjects exhibiting strong positive symptoms (e.g.hallucinations and delusions), using a time delay embedded correlation analysis. It finds a distinction between healthy and schizophrenia subjects in neuromotor coordination in speech.

ResearchGate.net

2020

Saurabh Sahu, Rahul Gupta, Carol Espy-Wilson

Implements three auto-encoder and GAN based models to synthetically generate higher dimensional feature vectors useful for speech emotion recognition from a simpler prior distribution pz.

IEEE Transactions on Affective Computing

2019

Saurabh Sahu, Vikramjit Mitra, Nadee Seneviratne, Carol Espy-Wilson

The paper leverages multi-modal learning and automated speech recognition (ASR) systems toward building a speech-only emotion recognition model.

Interspeech 2019

2023

Eadom Dessalene, Michael Maynord, Cornelia Fermüller, Yiannis Aloimonos

Motivated by Goldman’s Theory of Human Action—a framework in which action decomposes into 1) base physical movements, and 2) the context in which they occur—the authors propose a novel learning formulation for motion and context, where context is derived as the complement to motion.

2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV 2024)

Eadom Dessalene, Michael Maynord, Cornelia Fermüller, Yiannis Aloimonos

LEAP is a novel method for generating video-grounded action programs through use of a Large Language Model (LLM). These action programs represent the motoric, perceptual, and structural aspects of action, and consist of sub-actions, pre- and post-conditions, and control flows. LEAP’s action programs are centered on egocentric video and employ recent developments in LLMs both as a source for program knowledge and as an aggregator and assessor of multimodal video information.

arXiv.org

Dehao Yuan, Furong Huang, Cornelia Fermüller, Yiannis Aloimonos

Given samples of a continuous object (e.g. a function), Hyper-Dimensional Function Encoding (HDFE) produces an explicit vector representation of the given object, invariant to the sample distribution and density. Sample distribution and density invariance enables HDFE to consistently encode continuous objects regardless of their sampling, and therefore allows neural networks to receive continuous objects as inputs for machine learning tasks such as classification and regression. HDFE does not require any training and is proved to map the object into an organized embedding space, which facilitates the training of the downstream tasks. In addition, the encoding is decodable, which enables neural networks to regress continuous objects by regressing their encodings. HDFE can be used as an interface for processing continuous objects.

arXiv.org

Amir-Hossein Shahidzadeh, Seong Jong Yoo, Pavan Mantripragada, Chahat Deep Singh, Cornelia Fermüller, Yiannis Aloimonos

Tactile exploration plays a crucial role in understanding object structures for fundamental robotics tasks such as grasping and manipulation. However, efficiently exploring such objects using tactile sensors is challenging, due to the large-scale unknown environments and limited sensing coverage of these sensors. Here, the authors present AcTExplore, an active tactile exploration method driven by reinforcement learning for object reconstruction at scales that automatically explores the object surfaces in a limited number of steps. Through sufficient exploration, this algorithm incrementally collects tactile data and reconstructs 3D shapes of the objects as well, which can serve as a representation for higher-level downstream tasks. The method achieves an average of 95.97% IoU coverage on unseen YCB objects while just being trained on primitive shapes.

arXiv.org

Matthew S. Evanusa, Vaishnavi Patil, Michelle Girvan, Joel Goodman, Cornelia Fermüller, Yiannis Aloimonos

Robots are active agents that operate in dynamic scenarios with noisy sensors. Predictions based on these noisy sensor measurements often lead to errors and can be unreliable. To this end, roboticists have used fusion methods using multiple observations. Lately, neural networks have dominated the accuracy charts for percep- tion-driven predictions for robotic decision-making and often lack uncertainty metrics associated with the pre- dictions. Here, the authors present a mathematical formulation to obtain the heteroscedastic aleatoric uncertainty of any arbitrary distribution without prior knowledge about the data. The approach has no prior assumptions about the prediction labels and is agnostic to network architecture.

International Conference on Artificial Neural Networks (ICANN 2023) as compiled in International Conference on Artificial Neural Networks and Machine Learning by Springer

Nitin Sanket, Chahat Deep Singh, Cornelia Fermüller, Yiannis Aloimonos

Robots are active agents that operate in dynamic scenarios with noisy sensors. Predictions based on these noisy sensor measurements often lead to errors and can be unreliable. To this end, roboticists have used fusion methods using multiple observations. Lately, neural networks have dominated the accuracy charts for percep- tion-driven predictions for robotic decision-making and often lack uncertainty metrics associated with the pre- dictions. Here, the authors present a mathematical formulation to obtain the heteroscedastic aleatoric uncertainty of any arbitrary distribution without prior knowledge about the data. The approach has no prior assumptions about the prediction labels and is agnostic to network architecture.

Science Robotics

Daniel Deniz, Eduardo Ross, Cornelia Fermüller, Manuel Rodriguez-Alvarez, Francisco Barranco

Neuromorphic visual sensors are artificial retinas that output sequences of asynchronous events when brightness changes occur in the scene. These sensors offer many advantages including very high temporal resolution, no motion blur and smart data compression ideal for real-time process- ing. In this study, the authors introduce an event-based dataset on fine-grained manipulation actions and perform an experimental study on the use of transformers for action prediction with events. There is enormous interest in the fields of cognitive robotics and human-robot interaction on understanding and predicting human actions as early as possible. Early prediction allows anticipating complex stages for planning, enabling effective and real-time interaction. Their transformer network uses events to predict manipulation actions as they occur, using online inference.

arXiv.org

Chinmaya Devaraj, Cornelia Fermüller, Yiannis Aloimonos

GCN-based zero-shot learning approaches commonly use fixed input graphs representing external knowledge that usually comes from language. However, such input graphs fail to incorporate the visual domain nuances. The authors introduce a method to ground the external knowledge graph visually. The method is demonstrated on a novel concept of grouping actions according to a shared notion and shown to be of superior performance in zero-shot action recognition on two challenging human manipulation action datasets, the EPIC Kitchens dataset, and the Charades dataset. They further show that visually grounding the knowledge graph enhances the performance of GCNs when an adversarial attack corrupts the input graph.

Computer Vision Foundation workshop

Snehesh Shrestha, Ishan Tamrakar, Cornelia Fermüller, Yiannis Aloimonos

Haptic sensing can provide a new dimension to enhance people’s musical and cinematic experiences. However, designing a haptic pattern is neither intuitive nor trivial. Imagined haptic patterns tend to be different from experienced ones. As a result, researchers use simple step-curve patterns to create haptic stimul. Here, the authors designed and developed an intuitive haptic pattern designer that lets you rapidly prototype creative patterns. The simple architecture, wireless connectivity, and easy-to-program communication protocol make it modular and easy to scale. In this demo, workshop participants can select from a library of haptic patterns and design new ones. They can feel the pattern as they make changes in the user interface.

arXiv.org

Neal Anwar, Chethan Parameshwara, Cornelia Fermüller, Yiannis Aloimonos

Hyperdimensional Computing (HDC) is an emerging neuroscience-inspired framework wherein data of various modalities can be represented uniformly in high-dimensional space as long, redundant holographic vectors. When equipped with the proper Vector Symbolic Architecture (VSA) and applied to neuromorphic hardware, HDC-based networks have been demonstrated to be capable of solving complex visual tasks with substantial energy efficiency gains and increased robustness to noise when compared to standard Artificial Neural Networks (ANNs). Here, the authors present a bipolar HD encoding mechanism designed for encoding spatiotemporal data, which captures the contours of DVS-generated time surfaces created by moving objects by fitting to them local surfaces which are individually encoded into HD vectors and bundled into descriptive high-dimensional representations.

IEEE 2023 57th Annual Conference on Information Sciences and Systems (CISS)

Snehesh Shrestha, William Sentosatio, Huiashu Peng, Cornelia Fermüller, Yiannis Aloimonos

This work introduces FEVA, a video annotation tool with streamlined interaction techniques and a dynamic interface that makes labeling tasks easy and fast. FEVA focuses on speed, accuracy, and simplicity to make annotation quick, consistent, and straightforward.

arXiv.org

2022

Hussam Amrouch, Mohsen Imani, Xun Jiao, Yiannis Aloimonos, Cornelia Fermuller, Dehao Yuan, Dongning Ma, Hamza E. Barkam, Paul Genssler, Peter Sutor

Hyperdimensional Computing (HDC) is rapidly emerging as an attractive alternative to traditional deep learning algorithms. Despite the profound success of Deep Neural Networks (DNNs) in many domains, the amount of computational power and storage that they demand during training makes deploying them in edge devices very challenging if not infeasible. This, in turn, inevitably necessitates streaming the data from the edge to the cloud which raises serious concerns when it comes to availability, scalability, security, and privacy. Further, the nature of data that edge devices often receive from sensors is inherently noisy. However, DNN algorithms are very sensitive to noise, which makes accomplishing the required learning tasks with high accuracy immensely difficult. In this paper, we aim at providing a comprehensive overview of the latest advances in HDC.

2022 International Conference on Hardware/Software Codesign and System Synthesis (CODES+ISSS)

Peter Sutor, Dehao Yuan, Douglas Summers-Stay, Cornelia Fermüller, Yiannis Aloimonos

The authors explore the notion of using binary hypervectors to directly encode the final, classifying output signals of neural networks in order to fuse differing networks together at the symbolic level.

arXiv.org

Matthew Evanusa, Snehesh Shrestha, Vaishnavi Patil, Cornelia Fermüller, Michelle Girvan & Yiannis Aloimonos

Echo State Networks are a class of recurrent neural networks that can learn to regress on or classify sequential data by keeping the recurrent component random and training only on a set of readout weights, which is of interest to the current edge computing and neuromorphic community. However, they have struggled to perform well with regression and classification tasks and therefore, could not compete in performance with traditional RNNs, such as LSTM and GRU networks. To address this limitation, the authors have developed a hybrid network called Parallelized Deep Readout Echo State Network that combines the deep learning readout with a fast random recurrent component, with multiple ESNs computing in parallel.

SN Computer Science (Springer)

2021

Chethan M. Parameshwara, Simin Li, Cornelia Fermüller, Nitin J. Sanket, Matthew S. Evanusa, Yiannis Aloimonos

The researchers propose SpikeMS, the first deep encoder-decoder SNN architecture for the real-world large-scale problem of motion segmentation using the event-based DVS camera as input.

arXiv.org

2020

Matthew Evanusa, Cornelia Fermüller, Yiannis Aloimonos

The researchers present a novel Deep Reservoir Network for time series prediction and classification that learns through non-differentiable hidden reservoir layers using a biologically-inspired back propagation alternative. This alternative, called Direct Feedback Alignment, resembles global dopamine signal broadcasting in the brain. The researchers demonstrate its efficacy on two real-world multidimensional time series datasets.

arXiv.org

Matthew Evanusa, Cornelia Fermüller, Yiannis Aloimonos

The researchers show that a large, deep layered spiking neural network with dynamical, chaotic activity mimicking the mammalian cortex with biologically-inspired learning rules, such as STDP, is capable of encoding information from temporal data.

arXiv.org

Matthew Evanusa, Snehesh Shrestha, Michelle Girvan, Cornelia Fermüller, Yiannis Aloimonos

Demonstrates the use of a backpropagation hybrid mechanism for parallel reservoir computingwith a meta ring structure and its application on a real-world gesture recognition dataset. This mechanism can be used as an alternative to state of the art recurrent neural networks, LSTMs and GRUs.

arXiv.org

Eadom Dessalene, Michael Maynord, Chinmaya Devaraj, Cornelia Fermüller, Yiannis Aloimonos

Introduces Egocentric Object Manipulation Graphs (Ego-OMG):a novel representation for activity modeling and anticipation of near future actions.

arXiv.org

Anton Mitrokhin, Peter Sutor, Douglas Summers-Stay, Cornelia Fermüller, Yiannis Aloimonos

By using hashing neural networks to produce binary vector representations of images, the authors show how hyperdimensional vectors can be constructed such that vector-symbolic inference arises naturally out of their output.

Frontiers in Robotics and AI

Anton Mitrokhin, Zhiyuan Hua, Cornelia Fermüller, Yiannis Aloimonos

Presents a Graph Convolutional neural network for the task of scene motion segmentation by a moving camera. Describes spatial and temporal features of event clouds, which provide cues for motion tracking and segmentation.

Computer Vision Foundation

Chethan M. Parameshwara, Nitin J. Sanket, Arjun Gupta, Cornelia Fermüller, Yiannis Aloimonos

A solution to multi-object motion segmentation using a combination of classical optimization methods along with deep learning and does not require prior knowledge of the 3D motion and the number and structure of objects.

arXiv.org

John Kanu, Eadom Dessalene, Xiaomin Lin, Cornelia Fermüller, Yiannis Aloimonos

A novel robotic agent framework for learning to perform temporally extended tasks using spatial reasoning in a deep reinforcement learning framework, by sequentially imagining visual goals and choosing appropriate actions to fulfill imagined goals.

arXiv.org

2022

L. A. Prashanth, Michael Fu

Prashanth and Fu are the editors of this volume in the Foundations and Trends® in Machine Learning series by now Publishers Inc. This publication includes sections on Markov decision processes (MDPs), risk measures, background on policy evaluation and gradient estimation, policy gradient templates for risk-sensitive RL, MDPs with risk as the constraint, and MDPs with risk as the objective.

Foundations and Trends® in Machine Learning series

2019

Michael Fu

The deep neural networks of AlphaGo and AlphaZero can be traced back to an adaptive multistage sampling (AMS) simulation-based algorithm for Markov decision processed published by HS Chang, Michael C. Fu and Steven I Marcus in Operations Research in 2005. Here, Fu retraces history, talks about the impact of the initial research, and suggests enhancements for the future.

Asian-Pacific Journal of Operational Research

2023

Sangeeth Balakrishnan, Francis VanGessel, Brian Barnes, Ruth Doherty, William Wilson, Zois Boukouvalas, Mark Fuge, Peter Chung

Data-driven machine learning techniques can be useful for the rapid evaluation of material properties in extreme environments, particularly in cases where direct access to the materials is not possible. Such problems occur in high-throughput material screening and material design approaches where many candidates may not be amenable to direct experimental examination. In this paper, the authors perform an exhaustive examination of the applicability of machine learning for the estimation of isothermal shock compression properties, specifically the shock Hugoniot, for diverse material systems. A comprehensive analysis is conducted where effects of scarce data, variances in source data, feature choices, and model choices are systematically explored. New modeling strategies are introduced based on feature engineering, including a feature augmentation approach, to mitigate the effects of scarce data. The findings show significant promise of machine learning techniques for design and discovery of materials suited for shock compression applications.

Journal of Applied Physics

2022

Jesse Hearn, Sangeeth Balakrishnan, Francis VanGessel, Zois Boukouvalas, Brian Barnes, Ian Michel-Tyler, Ruth Doherty, William Wilson, William Durant, Mark Fuge, Peter Chung

High energy density polymeric binders are a class of polymer materials that can be used in lieu of inert binders in high energy density mixtures. By using higher energy binders, the overall internal energy of the mixture can be designed intentionally and proactively. This paper presents recent efforts to develop a machine learning approach to learn, predict, and design novel energetic polymers. The scarcity of data available for energetic polymers is a particular challenge that the authors overcome through transfer learning techniques. Generally-speaking, transfer learning is a class of machine learning algorithm that assists the learning of general trends within one dataset using alternate datasets. In their approach, the researchers use a feature transfer learning approach based on low-level physiochemical data that may be obtained for any molecule.

APS 22nd Biennial Conference of the APS Topical Group on Shock Compression of Condensed Matter

Connor O'Ryan, Francis VanGessel, Zois Boukouvalas, Mark Fuge, Peter Chung, Ian Michel-Tyler, Ruth Doherty, William Wilson, Kevin Hayes

Within the past two decades machine-learning algorithms have seen diverse development and implementation in a variety of domains, including those related to shock compression. These developments include advances in computationally assisted synthesis planning and natural language processing for text documents in the context of chemical energy. The objective of this work is to explore the intersection of these emergent research capabilities and develop automatable approaches for extracting synthesis information for chemical storage from text documents to create novel representations via knowledge graphs.

APS 22nd Biennial Conference of the APS Topical Group on Shock Compression of Condensed Matter

Sangeeth Balakrishnan, Francis VanGessel, Zois Boukouvalas, Brian Barnes, Mark Fuge, Peter Chung

Deep learning has shown a high potential for generating molecules with desired properties. But generative modeling can often lead to novel, speculative molecules whose synthesis routes are not obvious. Moreover, the cost and time required to calculate or measure high energy properties have restricted the available data set sizes for this class of materials, thereby limiting the usefulness of deep learning-based methods. As a solution to this problem, the authors propose a deep learning-based method that fuses data from multiple molecule classes, effectively enabling the learning and designing of high energy molecules with the assistance of data for general organic molecules, which tend to be available in massive databases.

APS 22nd Biennial Conference of the APS Topical Group on Shock Compression of Condensed Matter

Allen Garcia, Connor O'Ryan, Gaurav Kumar, Zois Boukouvalas, Mark Fuge, Peter Chung

This paper tests whether statistical relationships exist between the language used to discuss energetic materials and their fundamental physicochemical properties. A surprising and remarkable degree of statistical equivalence is found, in some cases showing >90% confidence levels. This work posits a new means for using automated machine-assisted approaches to learn from technical documents and facilitate the search and discovery of new materials.

APS 22nd Biennial Conference of the APS Topical Group on Shock Compression of Condensed Matter

2021

Qiuyi Chen, Phillip Pope, Mark Fuge

The manifold hypothesis forms a pillar of many modern machine learning techniques. Within the context of design, it proposes that valid designs reside on low dimensional manifolds in the high dimensional design spaces. Here, the authors present the optimal-transport-based sibling of their previous work, BézierGAN, that surpasses its predecessor in terms of both manifold approximating precision and learning speed. They also provide methodology that helps determine the intrinsic dimension of the design manifold beforehand.

AIAA SciTech Forum 2022

Arthur Drake, Qiuyi Chen, Mark Fuge

Complex engineering problems such as compressor blade optimization often require large amounts of data and computational resources to produce optimal designs because traditional approaches only operate in the original high-dimensional design space. To mitigate this issue, the authors develop a simple yet effective autoencoder architecture that operates on a prior ε-frontier from examples of past optimization trajectories. This paper focuses on using such non-linear methods to maximize dimensionality reduction on an easily verifiable synthetic dataset, providing a faster alternative to high-fidelity simulation techniques.

aaas.org

2020

Xiaolong Liu, Seda Aslan, Rachel Hess, Paige Mass, Laura Olivieri, Yue-Hin Loke, Narutoshi Hibino, Mark Fuge, Axel Krieger

Develops a computational framework for automatically designing optimal shapes of patient-specific TEVGs for aorta surgery.

42nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society

Wei Chen, Mark Fuge

The authors propose a Bayesian optimization approach that only needs to specify an initial search space that does not necessarily include the global optimum, and expands the search space when necessary.

arXiv.org

2019

Eliot Rudnick-Cohen, Shapour Azarm and Jeffrey Herrmann

The paper presents Scenario Generation and Local Refinement Optimization (SGLRO), a new approach for solving non-convex robust optimization problems.

Journal of Mechanical Design

2023

Ashish Seth, Sreyan Ghosh, S. Umesh, Dinesh Manocha

Continued pre-training (CP) offers multiple advantages, like target domain adaptation and the potential to exploit the continuous stream of unlabeled data available online. However, continued pre-training on out-of-domain distributions often leads to catastrophic forgetting of previously acquired knowledge, leading to sub-optimal ASR performance. This paper presents FusDom, a simple and novel methodology for SSL-based continued pre-training. /p>

arXiv.org

Ashish Seth, Sreyan Ghosh, S. Umesh, Dinesh Manocha

Continued self-supervised (SSL) pre-training for adapting existing SSL models to the target domain has shown to be extremely effective for low-resource Automatic Speech Recognition (ASR). This paper proposes Stable Distillation, a simple and novel approach for SSL-based continued pre-training that boosts ASR performance in the target domain where both labeled and unlabeled data are limited.

arXiv.org

Puneet Mathur, Zhe Liu, Ke Li, Yingyi Ma, Gil Keren, Zeeshan Ahmed, Dinesh Manocha, Xuedong Zhang

PersonaLM is a domain-distributed, span-aggregated K-nearest N-gram retrieval augmentation to improve language modeling for Automatic Speech Recognition personalization.

Findings of the Association for Computational Linguistics: EMNLP 2023

Anton Ratnarajah, Sreyan Ghosh, Sonal Kumar, Purva Chiniya, Dinesh Manocha

The choice of input text prompt plays a critical role in the performance of Vision-Language Pretrained (VLP) models such as CLIP. Apollo is a unified multi-modal approach that combines Adapter and Prompt learning for Vision-Language models. The method is designed to substantially improve the generalization capabilities of VLP models when they are fine-tuned in a few-shot setting.

arXiv.org

Anton Ratnarajah, Sreyan Ghosh, Sonal Kumar, Purva Chiniya, Dinesh Manocha

Accurate estimation of Room Impulse Response (RIR), which captures an environment’s acoustic properties, is im- portant for speech processing and AR/VR applications. The authors propose AV-RIR, a novel multi-modal multi-task learning approach to accurately estimate the RIR from a given reverberant speech signal and the visual cues of its corresponding environment.

arXiv.org

Divya Kothandaraman, Tianyi Zhou, Ming Lin, Dinesh Manocha

AerialBooth synthesizes the aerial view from a single input image using its text description. The authors leverage the pretrained text-to-2D image stable diffusion model as prior knowledge of the 3D world. The model is finetuned in two steps to optimize for the text embedding and the UNet that reconstruct the input image and its inverse perspective mapping respectively. The inverse perspective mapping creates variance within the text-image space of the diffusion model, while providing weak guidance for aerial view synthesis. AerialBooth achieves the best viewpoint-fidelity trade-off though quantitative evaluation on 7 metrics analyzing viewpoint and fidelity w.r.t. input image.

arXiv.org

Divya Kothandaraman, Tianyi Zhou, Ming Lin, Dinesh Manocha

Aerial Diffusion generates aerial views from a single ground-view image using text guidance. Aerial Diffusion leverages a pretrained text-image diffusion model for prior knowledge. The authors address two main challenges corresponding to domain gap between the ground-view and the aerial view and the two views being far apart in the text-image embedding manifold. The approach uses a homography inspired by inverse perspective mapping prior to finetuning the pretrained diffusion model. Aerial Diffusion is the first approach that performs single image ground-to-aerial translation in an unsupervised manner.

SIGGRAPH Asia 2023 Technical Communications

Sreyan Ghosh, Ashish Seth, Sonal Kumar, Utkarsh Tyagi, Chandra Kiran Reddy Evuru, S. Ramaneswaran, S. Sakshi, Oriol Nieto, Ramani Duraiswami, Dinesh Manocha

Audio-language models (ALMs) trained using a contrastive approach (e.g., CLAP) that learns a shared representation between audio and language modalities have improved performance in many downstream applications, including zero-shot audio classification, audio retrieval, etc. However, the ability of these models to effectively perform compositional reasoning remains largely unexplored and necessitates additional research. Here, the authors propose CompA, a collection of two expert-annotated benchmarks, with a majority of real-world audio samples, to evaluate compositional reasoning in ALMs. The proposed CompA-order evaluates how well an ALM understands the order or occurrence of acoustic events in audio, and CompA-attribute evaluates attribute binding of acoustic events.

arXiv.org

Biao Jia, Dinesh Manocha

Developing proficient brush manipulation capabilities in real-world scenarios is a complex and challenging endeavor, with wide-ranging applications in fields such as art, robotics, and digital design. Here, the authors introduce an approach designed to bridge the gap between simulated environments and real-world brush manipulation. The framework leverages behavior cloning and reinforcement learning to train a painting agent, seamlessly integrating it into both virtual and real-world environments.

arXiv.org

Sreyan Ghosh, Sonal Kumar, Chandra Kiran Reddy Evuru, Ramani Duraiswami, Dinesh Manocha

RECAP is effective audio captioning system that generates captions conditioned on an input audio and other captions similar to the audio retrieved from a datastore. Additionally, our proposed method can transfer to any domain without the need for any additional fine-tuning.

arXiv.org

Puneet Mathur, Rajiv Jain, Jiuxiang Gu, Franck Dernoncourt, Dinesh Manocha, Vlad Morariu

Proposes a new task of language-guided localized document editing, where the user provides a document and an open vocabulary editing request, and the intelligent system produces a command that can be used to automate edits in real-world document editing software.

37th AAAI Conference on Artificial Intelligence (AAAI-23)

Dinesh Manocha, Zherong Pan

AdVerb is a novel audio-visual dereverberation framework that uses visual cues in addition to the reverberant sound to estimate clean audio. Although audio-only dereverberation is a well-studied problem, the researchers' approach incorporates the complementary visual modality to perform audio dereverberation.

SIGGRAPH 2017 (ACM SIGRAPH Information and Artifacts History website)

Sanjoy Chowdhury, Sreyan Ghosh, Subhrajyoti Dasgupta, Anton Ratnarajah, Utkarsh Tyagi, Dinesh Manocha

AdVerb is a novel audio-visual dereverberation framework that uses visual cues in addition to the reverberant sound to estimate clean audio. Although audio-only dereverberation is a well-studied problem, the researchers' approach incorporates the complementary visual modality to perform audio dereverberation.

arXiv.org

Sreyan Ghosh, Chandra Kiran Reddy Evuru, Sonal Kumar, Utkarsh Tyagi, Sakshi Singh, Sanjoy Chowdhury, Dinesh Manocha

Neural image classifiers can often learn to make predictions by overly relying on non-predictive features that are spuriously correlated with the class labels in the training data. This leads to poor performance in real-world atypical scenarios where such features are absent. Supplementing the training dataset with images without such spurious features can aid robust learning against spurious correlations via better generalization. This paper presents ASPIRE (Language-guided data Augmentation for SPurIous correlation REmoval), a simple yet effective solution for expanding the training dataset with synthetic images without spurious features.

arXiv.org

Souradip Chakraborty, Amrit Singh Bedi, Alec Koppel, Dinesh Manocha, Furong Huang, Mengdi Wang

consider a bilevel optimization problem and connect it to a principal-agent framework, where the principal specifies the broader goals and constraints of the system at the upper level and the agent solves a Markov Decision Process (MDP) at the lower level. They propose Principal driven Policy Alignment via Bilevel RL (PPA-BRL), which efficiently aligns the policy of the agent with the principal’s goals.

Interactive Learning with Implicit Human Feedback Workshop; ICML 2023

Souradip Chakraborty, Amrit Singh Bedi, Alec Koppel, Dinesh Manocha, Furong Huang, Mengdi Wang

The authors consider a bilevel optimization problem and connect it to a principal-agent framework, where the principal specifies the broader goals and constraints of the system at the upper level and the agent solves a Markov Decision Process (MDP) at the lower level.

Interactive Learning with Implicit Human Feedback Workshop; ICML 2023

Puneet Mathur, Mihir Goyal, Ramit Sawhney, Ritik Mathur, Jochen L. Leidner, Franck Dernoncourt, Dinesh Manocha

Financial prediction is complex due to the stochastic nature of the stock market. Semi-structured financial documents present comprehensive financial data in tabular formats, and can often contain more than 100s tables worth of technical analysis along with a textual discussion of corporate history, and management analysis, compliance, and risks. Existing research focuses on the textual and audio modalities of financial disclosures from company conference calls to forecast stock volatility and price movement, but ignores the rich tabular data available in financial reports. Moreover, the economic realm is still plagued with a severe under-representation of various communities spanning diverse demographics, gender, and native speakers. In this work, the authors show that combining tabular data from financial semi-structured documents with text transcripts and audio recordings not only improves stock volatility and price movement prediction by 5-12% but also reduces gender bias caused due to audio-based neural networks by over 30%.

Findings of the Association for Computational Linguistics (EMNLP 2022)

Puneet Mathur, Rajiv Jain, Ashutosh Mehra, Jiuxiang Gu, Franck Dernoncourt, Anandhavelu N, Quan Tran, Verena Kaynig-Fittkau, Ani Nenkova, Dinesh Manocha, Vlad I. Morariu

LayerDoc is an approach that uses visual features, textual semantics, and spatial coordinates along with constraint inference to extract the hierarchical layout structure of documents in a bottom-up, layer-wise fashion.

Winter Conference on Applications of Computer Vision (WACV) 2023, The Computer Vision Foundation

Divya Kothandaraman, Sumit Shekhar, Abhilasha Sanchetil, Manoj Ghuhan, Tripti Shukla, Dinesh Manocha

SALAD is a method for the challenging vision task of adapting a pre-trained “source” domain network to a “target” domain, with a small budget for annotation in the “target” domain and a shift in the label space.

Winter Conference on Applications of Computer Vision (WACV) 2023, The Computer Vision Foundation

Chunxiao Cao, Zili An, Zhong Ren, Dinesh Manocha, Kun Zhao

This work introduces bidirectional edge diffraction response function (BEDRF), a new approach to model wave diffraction around edges with path tracing. The diffraction part of the wave is expressed as an integration on path space, and the wave-edge interaction is expressed using only the localized information around points on the edge similar to a bidirectional scattering distribution function (BSDF) for visual rendering. For an infinite single wedge, the authros' model generates the same result as the analytic solution. This approach can be easily integrated into interactive geometric sound propagation algorithms that use path tracing to compute specular and diffuse reflections.

arXiv.org

Sreyan Ghosh, Utkarsh Tyagi, Manan Suri, Sonal Kumar, S. Ramaneswaran, Dinesh Manocha

Complex Named Entity Recognition (NER) is the task of detecting linguistically complex named entities in low-context text. In this paper, the authors present ACLM (Attention-map aware keyword selection for Conditional Language Model fine-tuning), a novel data augmenta- tion approach, based on conditional generation, to address the data scarcity problem in low- resource complex NER. ACLM alleviates the context-entity mismatch issue, a problem exist- ing NER data augmentation techniques suffer from and often generates incoherent augmentations by placing complex named entities in the wrong context. ACLM builds on BART and is optimized on a novel text reconstruction or de-noising task. They use selective masking (aided by attention maps) to retain the named entities and certain keywords in the input sentence that provide contextually relevant additional knowledge or hints about the named entities. Compared with other data augmentation strategies, ACLM can generate more diverse and coherent augmentations preserving the true word sense of complex entities in the sentence. They demonstrate the effectiveness of ACLM both qualitatively and quantitatively on monolingual, cross-lingual, and multilingual complex NER across various low-resource settings. ACLM outperforms all our neural baselines by a significant margin (1%-36%). In addition, they demonstrate the application of ACLM to other domains that suffer from data scarcity (e.g., biomedical). In practice, ACLM generates more effective and factual augmentations for these domains than prior methods.

arXiv.org

Xijun Wang, Ruiqi Xian, Tianrui Guan, Dinesh Manocha

A new general learning approach for action recognition, Prompt Learning for Action Recognition (PLAR), which leverages the strengths of prompt learning to guide the learning process.

arXiv.org

Sreyan Ghosh, Sonal Kumar, Utkarsh Tyagi, Dinesh Manocha

Biomedical Named Entity Recognition (BioNER) is the fundamental task of identifying named entities from biomedical text. However, BioNER suffers from severe data scarcity and lacks high-quality labeled data due to the highly specialized and expert knowledge required for annotation. Here, the authors present BioAug, a novel data augmentation framework for low-resource BioNER. BioAug, built on BART, is trained to solve a novel text reconstruction task based on selective masking and knowledge augmentation.

arXiv.org

Elizabeth Childs, Ferzam Mohammad, Logan Stevens, Hugo Burbelo, Amanuel Awoke, Nicholas Rewkowski, Dinesh Manocha

Although distance learning presents a number of interesting educational advantages as compared to in-person instruction, it is not without its downsides. Here the authors first assess the educational challenges presented by distance learning as a whole and identify 4 main challenges that distance learning currently presents as compared to in-person instruction: the lack of social interaction, reduced student engagement and focus, reduced comprehension and information retention, and the lack of flexible and customizable instructor resources. After assessing each of these challenges in-depth, they examine how AR/VR technologies might serve to address each challenge along with their current shortcomings, and finally outline the further research that is required to fully understand the potential of AR/VR technologies as they apply to distance learning.

IEEE Transactions on Visualization and Computer Graphics

Anton Ratnarajah, Dinesh Manocha

An end-to-end binaural audio rendering approach (Listen2Scene) for virtual reality (VR) and augmented reality (AR) applications. The authors propose a novel neural-network-based binaural sound propagation method to generate acoustic effects for 3D models of real environments. Any clean audio or dry audio can be convolved with the generated acoustic effects to render audio corresponding to the real environment. They also include a graph neural network that uses both the material and the topology information of the 3D scenes and generates a scene latent vector, and a conditional generative adversarial network (CGAN) to generate acoustic effects from the scene latent vector.

Harvard University Astrophysics Data System

Souradip Chakraborty, Amrit Singh Bedi, Sicheng Zhu, Bang An, Dinesh Manocha, Furon Huang