NSF funds Shamma, Espy-Wilson for neuromorphic and data-driven speech segregation research

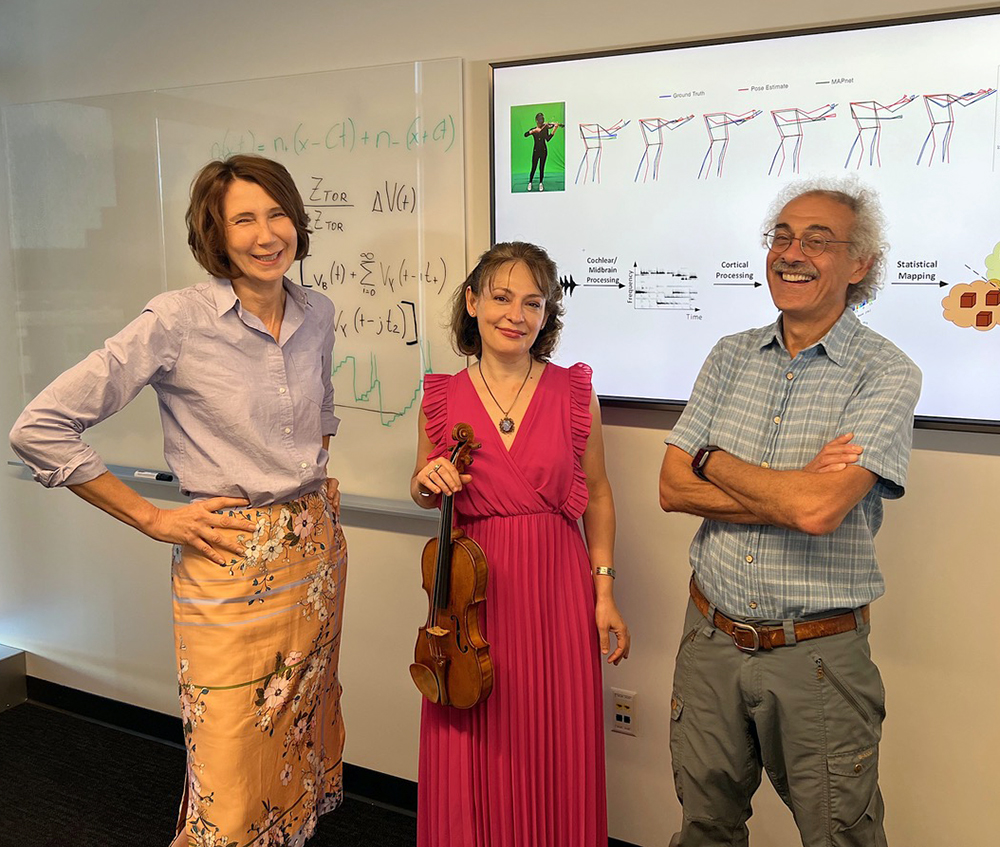

Professor Shihab Shamma (ECE/ISR) is the principal investigator and Professor Carol Espy-Wilson (ECE/ISR) is the co-PI for a new three-year, $851K National Science Foundation Information and Intelligent Systems award, "Neuromorphic and Data-Driven Speech Segregation."

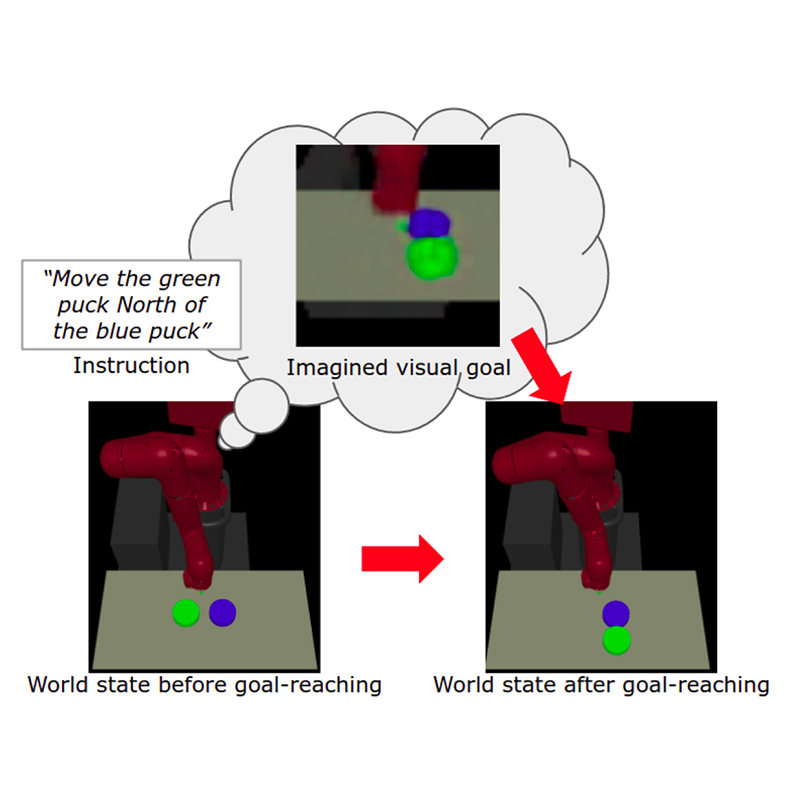

Their research will investigate how the brain's auditory cortical processes can be adapted, mimicked and applied to a central challenge in artificial intelligence (AI) signal processing: robust perception in extremely noisy and cluttered environments. The neural representations of speech and music are of particular interest. The project will formulate brain-inspired algorithms that segregate and track targeted speakers or sound sources, test their performance, and compare them with state-of-the-art approaches that use deep artificial neural networks for the same tasks.

The researchers will also conduct human psychoacoustic and physiological experiments with these algorithms to test how well they mimic human abilities. This effort will spur the development of new neuromorphic computational tools modeled after the brain and its cognitive functions. In turn, these tools will provide a theoretical framework to guide future experiments into how complex cognitive functions originate, how they influence sensory perception, and how they lead to robust behavioral performance.

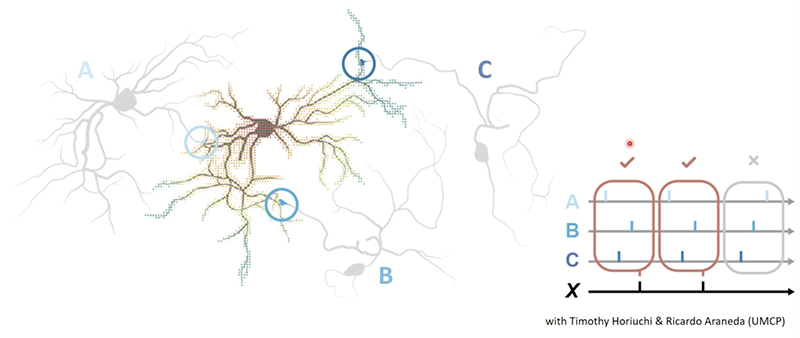

The research will proceed along two tracks. The first borrows from existing neuromorphic approaches that rely on cortical representations to develop new embeddings within a deep neural network (DNN) framework. This is intended to give the AI framework brain-like robustness in challenging, unanticipated environments. The three specific efforts on this track are learning DNN embeddings from cortical representations of speech and music, exploring unsupervised clustering of cortical features with adversarial autoencoders, and exploiting pitch and timbre representations to improve sound segregation.

The second track borrows from the DNN approach to build performance and flexibility into neuromorphic algorithms, which will be achieved by training on available databases. Two broad areas of study are planned. One addresses neuromorphic implementations that can benefit from DNN toolboxes and ideas, especially for segregation and reconstruction. The other investigates how autoencoders can be used to implement feature reduction and clustering efficiently.

Published September 25, 2018